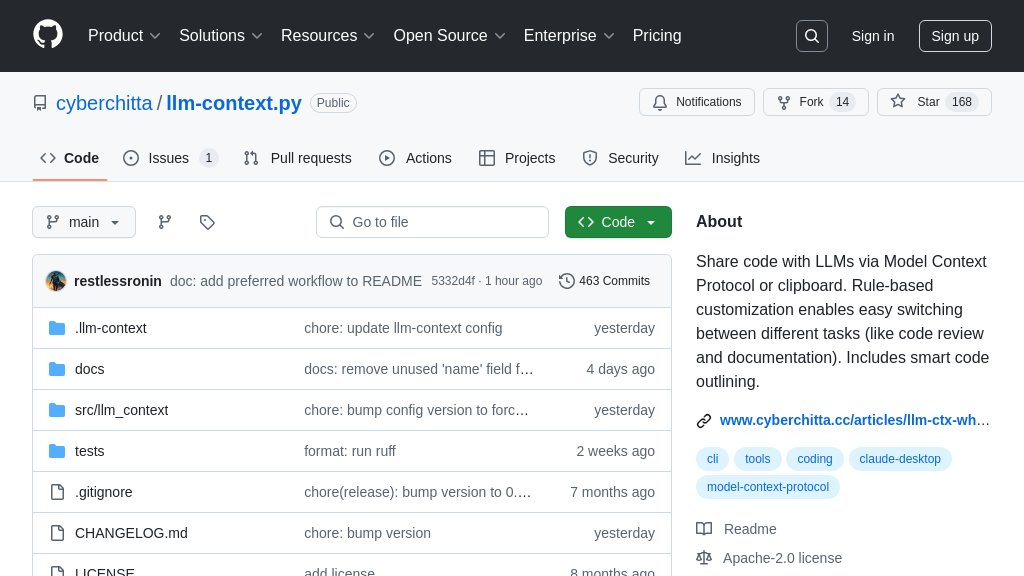

llm-context.py

llm-context.py: An MCP tool for seamless code integration with LLMs, enhancing AI-assisted development workflows.

llm-context.py Solution Overview

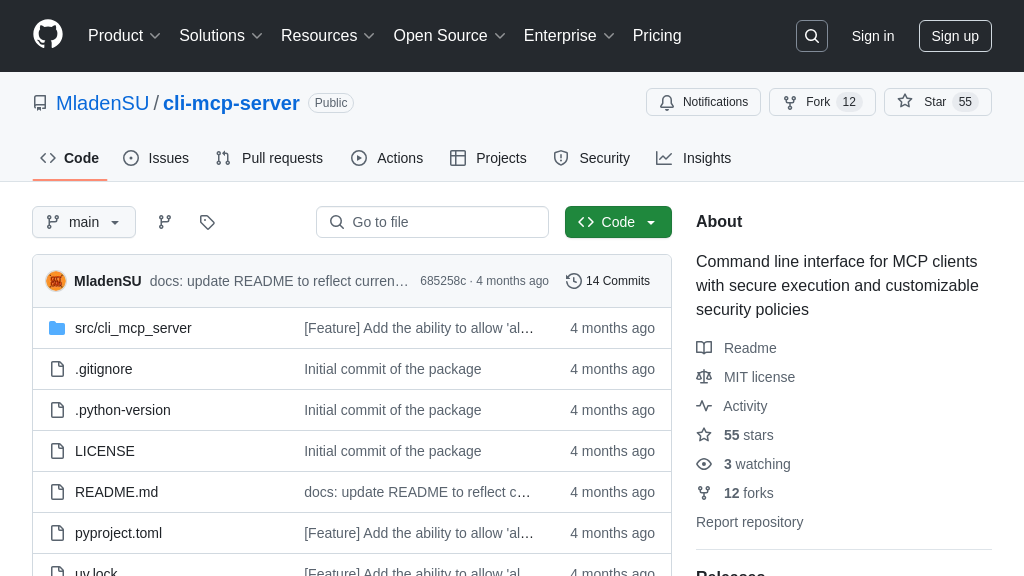

LLM Context is a developer tool for injecting relevant code or text from a project into Large Language Model (LLM) chats. It uses .gitignore patterns to decide which files to include, offers a clipboard-based workflow from the command line, and can serve context directly to an LLM through the Model Context Protocol (MCP).

Key features include a rule-based system for customizing context generation, code outlining, and extraction of the full implementation of a given definition. Giving the LLM the right context up front helps it produce more accurate responses and saves the developer from assembling that context by hand.

The tool supports direct integration with Claude Desktop via MCP and works with any other LLM chat interface through its CLI and clipboard functionality. It is optimized for projects that fit within the LLM's context window. To install it, run: uv tool install "llm-context>=0.3.0"

llm-context.py Key Capabilities

Intelligent File Selection

LLM Context uses .gitignore patterns to select the files that go into the LLM's context. Anything the project already ignores, such as build artifacts, installed dependencies, or local files that should not be shared, is filtered out automatically. Because the tool reuses rules the project already maintains, it fits existing development workflows and removes most of the manual effort of curating context. The LLM receives a focused set of files rather than a dump of the whole directory.

For example, when working on a Python project, the tool will automatically exclude files and directories specified in .gitignore, such as __pycache__, *.pyc, and venv, focusing the LLM's attention on the core source code and relevant configuration files. This is particularly useful in large projects where manually selecting files would be time-consuming and error-prone.

Technically, this is achieved by parsing the .gitignore file and using its patterns to filter the list of files in the project directory before generating the context for the LLM.
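The filtering step described above can be sketched in a few lines. This is a simplified illustration, not the tool's actual code: it uses the standard library's fnmatch instead of a full .gitignore matcher, so negation (!pattern) and anchored patterns are not handled, and the function names load_patterns, is_ignored, and select_files are hypothetical.

```python
from fnmatch import fnmatch
from pathlib import PurePosixPath


def load_patterns(gitignore_text: str) -> list[str]:
    """Return the non-empty, non-comment patterns from .gitignore text."""
    patterns = []
    for line in gitignore_text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            # Drop the trailing slash so "venv/" matches the "venv" path component.
            patterns.append(line.rstrip("/"))
    return patterns


def is_ignored(path: str, patterns: list[str]) -> bool:
    """True if the whole path or any single path component matches a pattern."""
    parts = PurePosixPath(path).parts
    return any(
        fnmatch(part, pattern) or fnmatch(path, pattern)
        for pattern in patterns
        for part in parts
    )


def select_files(paths: list[str], gitignore_text: str) -> list[str]:
    """Keep only the paths that no pattern excludes."""
    patterns = load_patterns(gitignore_text)
    return [p for p in paths if not is_ignored(p, patterns)]


GITIGNORE = """\
# Python build artifacts
__pycache__/
*.pyc
venv/
"""

candidates = [
    "src/app.py",
    "src/__pycache__/app.cpython-312.pyc",
    "venv/lib/site.py",
    "pyproject.toml",
]
selected = select_files(candidates, GITIGNORE)
print(selected)
```

With the sample patterns, only src/app.py and pyproject.toml survive the filter. A production implementation would more likely rely on a dedicated gitignore-matching library to get the full pattern semantics right.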

Streamlined Clipboard Workflow

LLM Context provides a clipboard workflow for LLMs that have no direct MCP integration. CLI commands select files, generate the context, and copy it to the clipboard, so developers can move code, documentation, or other project material into a chat interface with a single paste.

Imagine a scenario where a developer is using a web-based LLM interface. They can use lc-sel-files to select the relevant files, then lc-context -p to generate the context with instructions and copy it to the clipboard. Finally, they can paste the context into the LLM chat window and ask their question. This eliminates the need to manually copy and paste individual files, saving time and effort.

The implementation involves using Python's pyperclip library to interact with the system clipboard, allowing the tool to programmatically copy the generated context for easy pasting into any application.
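As a rough sketch of that clipboard step: the snippet below assembles a context string and hands it to pyperclip. The section layout (a "--- path ---" header per file) and the function names build_context and copy_to_clipboard are assumptions made for illustration; the format the tool actually emits may differ.

```python
def build_context(files: dict[str, str], instructions: str = "") -> str:
    """Join an optional instruction header and the file contents into one text blob."""
    sections = [instructions] if instructions else []
    for path, content in files.items():
        sections.append(f"--- {path} ---\n{content.rstrip()}")
    return "\n\n".join(sections) + "\n"


def copy_to_clipboard(text: str) -> bool:
    """Copy text with pyperclip if possible; return False when it is unavailable."""
    try:
        import pyperclip  # third-party package: pip install pyperclip

        pyperclip.copy(text)
        return True
    except Exception:
        # pyperclip is missing, or no clipboard mechanism exists (e.g. headless server).
        return False


context = build_context({"src/app.py": "print('hi')\n"}, "Review this code.")
print(context)
```

Calling copy_to_clipboard(context) afterwards places the text on the system clipboard, ready to paste into a chat window. The fallback return value lets a caller print the context instead when no clipboard is available.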

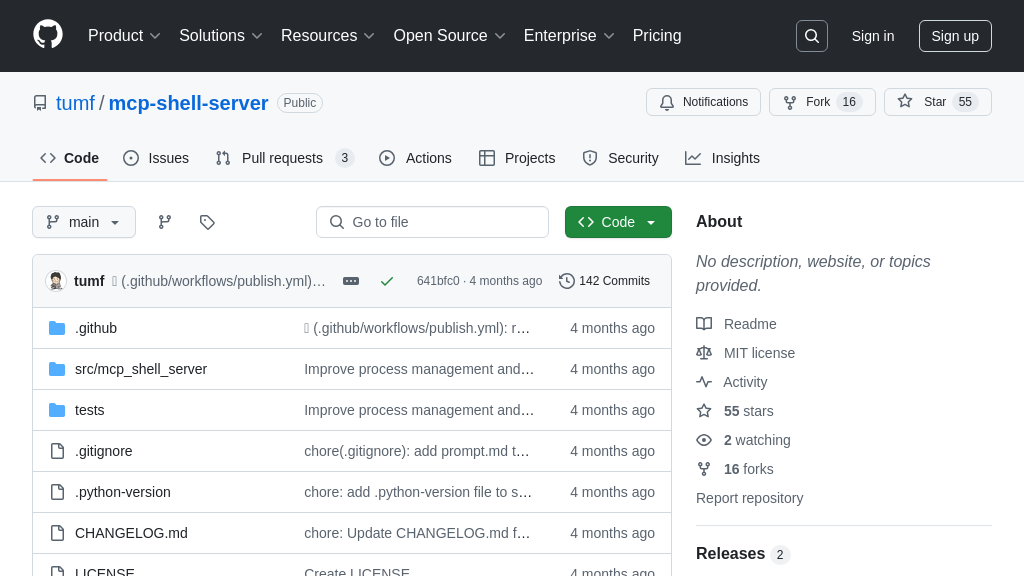

MCP-Based LLM Integration

LLM Context integrates directly with LLMs via the Model Context Protocol (MCP). With this integration the context can be updated dynamically: the LLM can request additional files or information from the project as the conversation requires. Because MCP is a standardized channel, the same setup works with any LLM client that supports the protocol, and the developer no longer has to manage context by hand.

For instance, the tool can be configured to work with Claude Desktop by adding a configuration snippet to claude_desktop_config.json. This allows Claude to directly request project context from LLM Context, providing a more integrated and efficient workflow. When Claude asks to "use my project", LLM Context will serve the relevant files.
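A configuration entry of this kind might look like the output of the sketch below, which builds the JSON in Python and prints it. The top-level mcpServers key is the one Claude Desktop reads for MCP servers; the entry name "llm-context" is an arbitrary label chosen here, and launching lc-mcp through uvx is an assumption that should be checked against the tool's current installation instructions.

```python
import json

# Assumptions: the entry name "llm-context" is just a label for this sketch,
# and the uvx invocation may differ between versions of the tool.
config = {
    "mcpServers": {
        "llm-context": {
            "command": "uvx",
            "args": ["--from", "llm-context", "lc-mcp"],
        }
    }
}

# Print the JSON that would be merged into claude_desktop_config.json.
print(json.dumps(config, indent=2))
```

If the config file already lists other servers, the new entry goes alongside them inside the existing mcpServers object rather than replacing it.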

The MCP integration is implemented as a server that listens for requests from the LLM client and responds with the requested project context. The server is launched with the lc-mcp command and communicates with the client over MCP.
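MCP messages are JSON-RPC 2.0, so the request/response pattern can be illustrated with a toy dispatcher. This is only a sketch of the idea, not the lc-mcp server: the method name get_project_context, its paths parameter, and the in-memory file table are all hypothetical, and a real MCP server also performs an initialization handshake and uses the method names defined by the protocol.

```python
import json
from typing import Callable


def get_project_context(params: dict) -> dict:
    """Hypothetical handler: a real server would read the selected files from disk."""
    files = {"src/app.py": "print('hi')\n", "README.md": "# Demo\n"}
    wanted = params.get("paths") or list(files)
    return {"files": {p: files[p] for p in wanted if p in files}}


# Map of method name to handler; the single entry is an assumption for this sketch.
HANDLERS: dict[str, Callable[[dict], dict]] = {
    "get_project_context": get_project_context,
}


def handle_message(raw: str) -> str:
    """Decode one JSON-RPC 2.0 request and return the encoded response."""
    request = json.loads(raw)
    handler = HANDLERS.get(request.get("method"))
    if handler is None:
        # -32601 is the JSON-RPC 2.0 error code for "Method not found".
        body = {"error": {"code": -32601, "message": "Method not found"}}
    else:
        body = {"result": handler(request.get("params", {}))}
    return json.dumps({"jsonrpc": "2.0", "id": request.get("id"), **body})


request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "get_project_context",
    "params": {"paths": ["src/app.py"]},
}
reply = json.loads(handle_message(json.dumps(request)))
print(reply)
```

Keeping the handlers in a lookup table makes it easy to see how a server could expose additional operations, such as outlines or implementation lookups, without changing the dispatch logic.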

Smart Code Outlines

LLM Context can generate code outlines that let the LLM grasp the high-level structure of a codebase quickly. The outline lists the important definitions, such as classes and functions, as a concise summary of how the code is organized. With that summary the LLM can see how the parts of the code relate to one another without reading every line, which matters most in large projects where the full source would not fit comfortably in the context window.

Consider a situation where a developer wants the LLM to review a large Python class. Instead of providing the entire class definition, they can use lc-outlines to generate an outline that highlights the key methods and attributes. This allows the LLM to focus on the most important aspects of the class and provide more targeted feedback.

The code outline generation is implemented using the tree-sitter library, which parses the code and extracts the relevant information based on predefined grammar rules. The extracted information is then formatted into a human-readable outline that can be easily understood by the LLM.
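To show what such an outline looks like, here is a minimal sketch that swaps tree-sitter for Python's built-in ast module, so it only handles Python source and needs no extra dependencies. The outline function and its output format are assumptions for illustration, not the format lc-outlines produces.

```python
import ast


def outline(source: str) -> list[str]:
    """Return one indented line per class and function definition."""
    lines: list[str] = []

    def visit(node: ast.AST, depth: int) -> None:
        # Only direct children are inspected, so definitions nested inside
        # if/try blocks are skipped in this simplified version.
        for child in ast.iter_child_nodes(node):
            indent = "  " * depth
            if isinstance(child, ast.ClassDef):
                lines.append(f"{indent}class {child.name}")
                visit(child, depth + 1)
            elif isinstance(child, (ast.FunctionDef, ast.AsyncFunctionDef)):
                args = ", ".join(a.arg for a in child.args.args)
                lines.append(f"{indent}def {child.name}({args})")
                visit(child, depth + 1)

    visit(ast.parse(source), 0)
    return lines


SAMPLE = '''
class Cart:
    def __init__(self):
        self.items = []

    def add(self, item, qty):
        self.items.append((item, qty))


def total(cart):
    return sum(q for _, q in cart.items)
'''

result = outline(SAMPLE)
print("\n".join(result))
```

The outline keeps names and signatures and drops the bodies, which is what makes it much smaller than the source it summarizes. A tree-sitter based version follows the same idea but works across many languages through per-language grammars.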

Definition Implementation Extraction

LLM Context can extract the complete implementation of specific definitions that the LLM asks for. With the lc-clip-implementations command, developers retrieve the full code of a function, class, or other code element on request. This complements outlines: the LLM starts from a compact overview and then pulls in the full body of only those definitions it needs to analyze in depth.

For example, if the LLM asks for the implementation of a specific function, the developer can copy the function name from the LLM's request, run lc-clip-implementations, and paste the function name. The tool will then extract the function's implementation and copy it to the clipboard, ready to be pasted back into the LLM chat.

This feature relies on parsing the code using tree-sitter to identify the start and end points of the requested definition. The tool then extracts the corresponding code block and copies it to the clipboard.
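The start-and-end lookup can be sketched as follows. As a stand-in for tree-sitter, this example uses Python's built-in ast module, whose nodes carry lineno and end_lineno attributes; the function name extract_definition is hypothetical, and the sketch covers Python source only.

```python
import ast
from typing import Optional


def extract_definition(source: str, name: str) -> Optional[str]:
    """Return the source text of the first function or class called `name`."""
    wanted = (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, wanted) and node.name == name:
            lines = source.splitlines()
            # lineno is 1-based and end_lineno is inclusive; decorators that
            # precede the definition are not included in this simple version.
            return "\n".join(lines[node.lineno - 1 : node.end_lineno])
    return None


SAMPLE = """\
def helper(x):
    return x * 2


def main():
    value = helper(21)
    print(value)
"""

snippet = extract_definition(SAMPLE, "helper")
print(snippet)
```

Returning None for an unknown name lets the caller report which requested definitions could not be found instead of silently copying nothing.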