markitdown-mcp

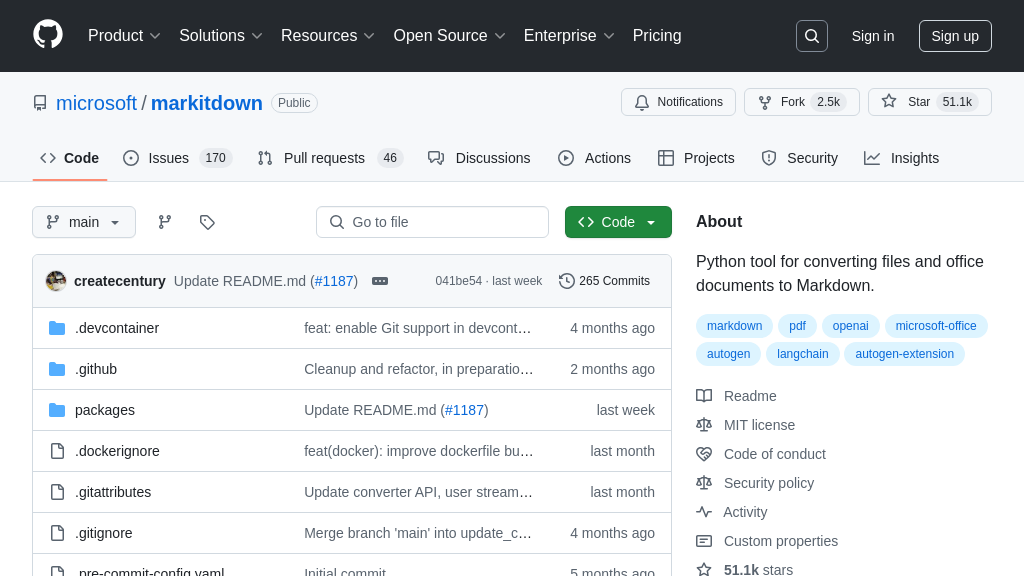

markitdown-mcp is an MCP server that uses the MarkItDown tool to convert diverse files into LLM-optimized Markdown. It lets AI models ingest structured content directly via the Model Context Protocol, streamlining data preparation.

markitdown-mcp Solution Overview

markitdown-mcp is an MCP server built on the MarkItDown conversion tool and designed to connect diverse data sources with AI models. It transforms a wide range of file types, including PDFs, Microsoft Office documents (Word, Excel, PowerPoint), images (with OCR), and audio (with transcription), into clean, structured Markdown optimized for Large Language Models. The conversion preserves essential document structure such as headings, lists, and tables, which gives the model more context to work with. As an MCP server, markitdown-mcp lets MCP-compatible clients, such as AI applications or agents, request and receive this processed content through a standardized protocol. This simplifies the data ingestion workflow for developers: there is no need for bespoke parsers, and AI applications can work with information previously locked in assorted unstructured formats.

markitdown-mcp Key Capabilities

Versatile File-to-Markdown Conversion

markitdown-mcp converts a wide array of file formats into clean, structured Markdown. This core capability addresses the fundamental challenge of preparing diverse, often unstructured or complexly structured data (PDFs, Word documents, PowerPoint decks, Excel sheets, images, audio files, and web content such as YouTube transcripts) for consumption by Large Language Models (LLMs). The tool extracts text content while preserving structural elements such as headings, lists, tables, and links, translating them into their Markdown equivalents. This standardizes input data, so developers can feed information from various sources into their AI pipelines without building or maintaining a separate parser for each file type. For instance, a developer building a RAG (Retrieval-Augmented Generation) system can use markitdown-mcp to convert a directory of .docx and .pdf research papers into Markdown, creating a uniform knowledge base that an LLM can process and query. Technically, it relies on format-specific libraries (e.g., python-pptx, pypdf, openpyxl) that are installed as optional dependencies, allowing users to install only what they need.
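The RAG scenario above could be sketched roughly as follows. This is a minimal sketch rather than the project's own tooling: `convert_directory` and `markitdown_converter` are hypothetical helper names, and the `MarkItDown().convert(path).text_content` call reflects the MarkItDown Python API as commonly documented, so it is worth checking against the installed version.

```python
from pathlib import Path
from typing import Callable, Dict, Iterable


def markitdown_converter() -> Callable[[Path], str]:
    """Return a converter backed by the markitdown package.

    The import is lazy so the rest of this sketch works without the package.
    Assumption: MarkItDown().convert(path) returns an object whose
    text_content attribute holds the Markdown.
    """
    from markitdown import MarkItDown

    md = MarkItDown()
    return lambda path: md.convert(str(path)).text_content


def convert_directory(
    src: Path,
    dst: Path,
    convert: Callable[[Path], str],
    suffixes: Iterable[str] = (".docx", ".pdf"),
) -> Dict[str, Path]:
    """Convert every matching file under src and write one .md file per input into dst."""
    wanted = {s.lower() for s in suffixes}
    dst.mkdir(parents=True, exist_ok=True)
    written: Dict[str, Path] = {}
    for path in sorted(src.rglob("*")):
        if path.is_file() and path.suffix.lower() in wanted:
            # Keep the original extension in the output name so that
            # report.docx and report.pdf do not overwrite each other.
            out = dst / (path.name + ".md")
            out.write_text(convert(path), encoding="utf-8")
            written[path.name] = out
    return written
```

With the package installed, a call such as `convert_directory(Path("papers"), Path("kb"), markitdown_converter())` would produce the uniform Markdown knowledge base; passing the converter in as a parameter also makes the loop easy to test with a stub.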

LLM-Optimized Markdown Output

The Markdown generated by markitdown-mcp is optimized for interaction with LLMs, prioritizing content structure and token efficiency over visual fidelity for human readers. It takes advantage of Markdown's simplicity and proximity to plain text, which aligns well with how models like GPT-4o are trained and process information. By focusing on semantic structure (headings, lists, tables) rather than complex styling, it keeps the core information and its relationships clearly represented in a format LLMs handle well. This improves the model's ability to comprehend context and extract relevant information accurately. The minimal markup also tends to need fewer tokens than more verbose formats such as HTML, or than noisy text dumps extracted from complex files, which can mean faster processing and lower costs for LLM API calls. For example, converting a dense PDF report into this Markdown format lets an AI model quickly grasp the document's section hierarchy and the key data points presented in tables, improving summarization and question-answering tasks.
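To make the markup-overhead point concrete, the sketch below renders the same small table as Markdown and as HTML and compares their lengths. Character count is only a crude stand-in for token count (real tokenizers behave differently), and the helper names and sample figures are invented for illustration.

```python
from typing import List, Sequence


def to_markdown_table(header: Sequence[str], rows: Sequence[Sequence[str]]) -> str:
    """Render a pipe-delimited Markdown table."""
    lines: List[str] = [
        "| " + " | ".join(header) + " |",
        "| " + " | ".join("---" for _ in header) + " |",
    ]
    lines += ["| " + " | ".join(row) + " |" for row in rows]
    return "\n".join(lines)


def to_html_table(header: Sequence[str], rows: Sequence[Sequence[str]]) -> str:
    """Render the same data as a compact HTML table (no whitespace padding)."""
    head = "<tr>" + "".join(f"<th>{c}</th>" for c in header) + "</tr>"
    body = "".join(
        "<tr>" + "".join(f"<td>{c}</td>" for c in row) + "</tr>" for row in rows
    )
    return f"<table><thead>{head}</thead><tbody>{body}</tbody></table>"


# Hypothetical sample data, not taken from any real report.
header = ["Quarter", "Revenue", "Growth"]
rows = [["Q1", "1.2M", "4%"], ["Q2", "1.4M", "17%"], ["Q3", "1.5M", "7%"]]

md_table = to_markdown_table(header, rows)
html_table = to_html_table(header, rows)

print(md_table)
print(f"markdown chars: {len(md_table)}, html chars: {len(html_table)}")
```

Even with the HTML written as compactly as possible, the paired open and close tags cost more characters per cell than Markdown's single pipe separators.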

Direct MCP Server Integration

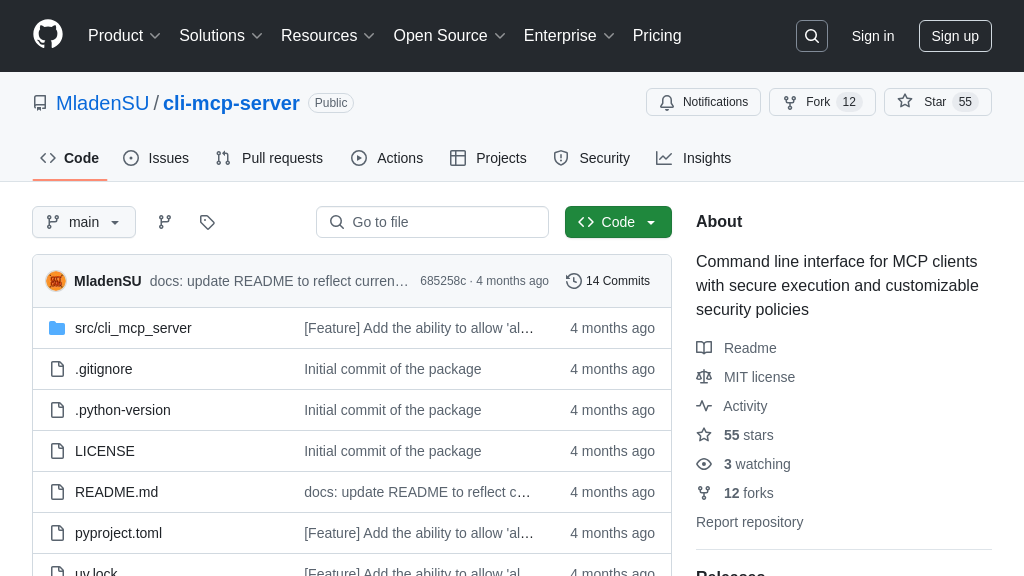

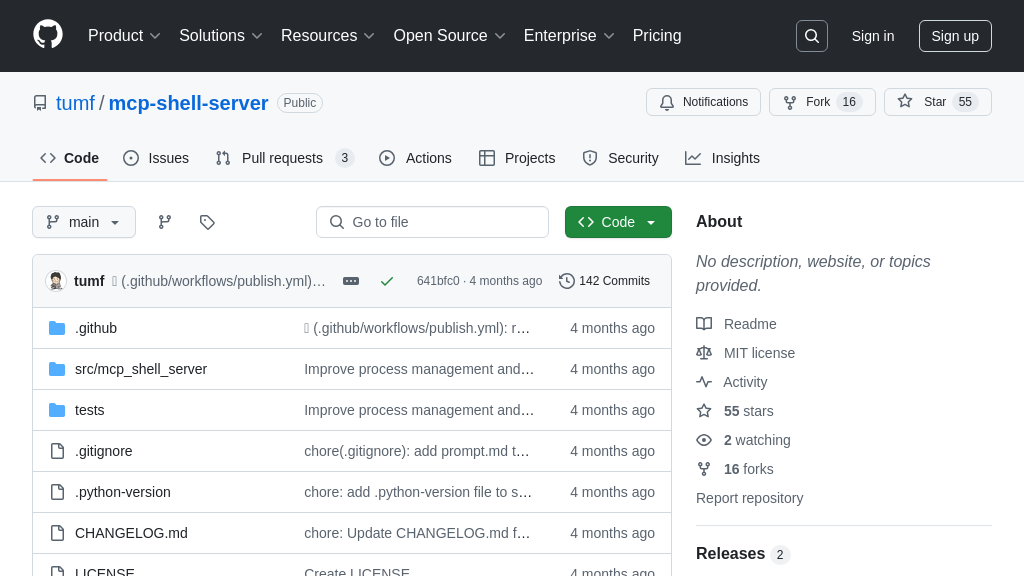

A key feature distinguishing markitdown-mcp within the MCP ecosystem is its built-in MCP server functionality. This turns MarkItDown from a standalone command-line or library tool into an active component that communicates directly with MCP clients, such as AI models or applications like Claude Desktop, using the standardized Model Context Protocol. Instead of requiring developers to run conversions manually and then pipe the output to an AI, the MCP server lets an AI application request content conversion from markitdown-mcp as a service. The server listens for MCP requests, performs the requested file conversion (e.g., processing a provided PDF file path or stream), and returns the resulting Markdown to the client over the MCP connection (likely via a transport such as standard I/O or HTTP/SSE, as supported by MCP). This integration streamlines workflows significantly. For instance, an AI assistant integrated via MCP could ask the markitdown-mcp server to "convert the document 'latest_report.docx' to Markdown" and receive the structured text back within the ongoing interaction, ready for analysis or summarization.
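On the wire, such a request is a JSON-RPC 2.0 message using MCP's `tools/call` method. The sketch below builds one and unpacks a reply using only the standard library. The tool name `convert_to_markdown` and its `uri` argument are assumptions based on the package's published description and should be confirmed by listing the server's tools. The file path and the helper names are hypothetical.

```python
import json
from urllib.parse import quote


def build_convert_request(path: str, request_id: int = 1) -> dict:
    """Build a JSON-RPC 2.0 'tools/call' message asking for a file conversion.

    Assumption: the server exposes a tool named 'convert_to_markdown'
    that takes a single 'uri' argument.
    """
    if not path.startswith("/"):
        raise ValueError("expected an absolute POSIX path")
    uri = "file://" + quote(path)  # quote() leaves '/' intact by default
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": "convert_to_markdown", "arguments": {"uri": uri}},
    }


def extract_markdown(response: dict) -> str:
    """Join the text parts of a tools/call result into one Markdown string."""
    if "error" in response:
        raise RuntimeError(response["error"].get("message", "MCP error"))
    parts = response["result"]["content"]
    return "\n".join(p["text"] for p in parts if p.get("type") == "text")


# Hypothetical path used purely for illustration.
request = build_convert_request("/workdir/latest_report.docx")
print(json.dumps(request, indent=2))
```

In practice an MCP client library would handle the initialization handshake and the transport. This sketch only shows the shape of the message that carries the conversion request and of the reply that carries the Markdown.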

Extensibility via Plugins and Integrations

markitdown-mcp is designed for flexibility, offering extensibility through a plugin architecture and integrations with external services. Users can enable third-party plugins to handle niche file formats or add custom processing steps during conversion, extending the tool beyond its core supported formats. Developers can create their own plugins by following the provided sample structure, tailoring MarkItDown to specific project needs. markitdown-mcp also integrates with external AI services. It can use Azure Document Intelligence for potentially higher-fidelity conversion of complex documents such as PDFs, drawing on that service's layout analysis and OCR capabilities. It also supports using LLMs (like GPT-4o via an OpenAI client) during the conversion process itself, specifically for tasks such as generating descriptive text for images embedded in documents. This extensibility means developers aren't limited to the built-in parsers. For example, a team working with specialized medical image formats could develop a plugin to extract metadata, use the Azure Document Intelligence integration to process scanned patient forms, and use the LLM integration to describe diagrams within .pptx presentations, all managed through markitdown-mcp.
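In the underlying Python library, these options are typically selected when the converter is constructed. The sketch below collects them into constructor keyword arguments. The keyword names (`enable_plugins`, `llm_client`, `llm_model`, `docintel_endpoint`) follow the MarkItDown README as best understood here and should be verified against the installed version. `markitdown_options` and `make_converter` are hypothetical helpers.

```python
from typing import Any, Dict, Optional


def markitdown_options(
    enable_plugins: bool = False,
    llm_client: Optional[Any] = None,
    llm_model: Optional[str] = None,
    docintel_endpoint: Optional[str] = None,
) -> Dict[str, Any]:
    """Assemble keyword arguments for the MarkItDown constructor.

    Assumption: the constructor accepts these keyword names.
    """
    opts: Dict[str, Any] = {"enable_plugins": enable_plugins}
    if llm_client is not None:
        # An LLM client is only useful together with a model name.
        if not llm_model:
            raise ValueError("llm_model is required when llm_client is set")
        opts["llm_client"] = llm_client
        opts["llm_model"] = llm_model
    if docintel_endpoint:
        opts["docintel_endpoint"] = docintel_endpoint
    return opts


def make_converter(**options: Any) -> Any:
    """Create a MarkItDown instance; needs the markitdown package installed."""
    from markitdown import MarkItDown  # lazy import keeps the sketch importable

    return MarkItDown(**markitdown_options(**options))
```

For example, `make_converter(enable_plugins=True, llm_client=OpenAI(), llm_model="gpt-4o")` would turn on installed plugins and LLM-generated image descriptions in one place, assuming the `openai` package is installed and configured.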