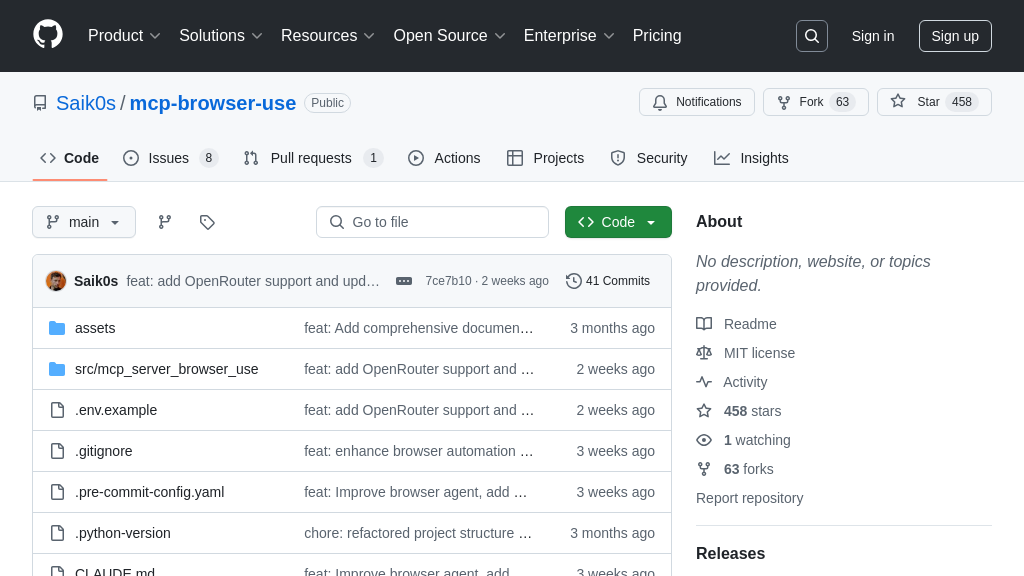

mcp-browser-use

mcp-browser-use is an MCP server that lets AI models automate a browser and carry out web research from natural-language instructions. It implements the Model Context Protocol (MCP), so MCP-compatible AI agents can call its tools for navigation, page interaction, and deep search tasks.

mcp-browser-use Solution Overview

mcp-browser-use is an MCP server that lets AI models perform browser automation and web research. It acts as a bridge within the MCP ecosystem: AI agents connected through MCP clients send natural-language instructions, and the server carries them out on the web. The run_browser_agent tool handles page navigation, form filling, and element interaction, while a dedicated run_deep_search tool produces research reports. The server works with multiple LLM providers and can optionally use vision capabilities. For developers, it removes the need to write browser-control and LLM-communication code when adding web interaction to an AI application. Configuration is managed through environment variables. The server can run its own browser instance or connect to a user's browser via the Chrome DevTools Protocol (CDP), which is useful during development and debugging.

mcp-browser-use Key Capabilities

Natural Language Browser Automation

The mcp-browser-use server lets AI models, connected via MCP clients, control a web browser with natural-language instructions. This functionality is delivered primarily through the run_browser_agent tool. When an MCP client invokes the tool with a task (e.g., "Find the contact email on example.com"), the server passes the request to a configured Large Language Model (LLM) for interpretation. The LLM generates a sequence of browser actions, such as navigating to a URL, clicking buttons, filling forms, scrolling, or extracting text. The server executes these actions with the Playwright browser automation library. If vision is enabled (MCP_USE_VISION=true) and the LLM supports it, the server can also have the model analyze screenshots. Because the AI drives a real browser, it can work with sites that expose no API, which addresses the developer problem of automating complex, non-API-driven web interactions; in effect, the AI gets "hands and eyes" on the web. For instance, a developer could instruct an AI agent: "Log into my project management tool, find the ticket assigned to me yesterday, and summarize its description." The server manages the browser session, either launching its own instance (headless or headed) or connecting to the user's browser via CDP.
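As a rough illustration of what an MCP client sends when it invokes the tool, the sketch below builds a JSON-RPC 2.0 `tools/call` request, the method MCP defines for tool invocation. The argument name `task` is an assumption based on the description above, not a confirmed schema; the server's tool listing is the authoritative source for the real argument names. `build_tool_call` is a hypothetical helper, and real clients normally let an MCP SDK construct and transport this message.

```python
import json
from itertools import count

# Monotonic request ids; JSON-RPC requires an id on each request.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    if not tool_name:
        raise ValueError("tool_name must not be empty")
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# The "task" key is an assumed argument name, used here for illustration only.
message = build_tool_call(
    "run_browser_agent",
    {"task": "Find the contact email on example.com"},
)
```

The same helper would cover run_deep_search by changing the tool name and arguments, again subject to the schema the server actually advertises.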

Automated Deep Web Research

Beyond simple browser tasks, mcp-browser-use offers a dedicated tool, run_deep_search, for multi-step web research. When invoked with a research topic (e.g., "What are the latest market trends for renewable energy in Europe?"), the tool starts an autonomous research process. It uses an LLM to break down the topic, formulate search queries, execute searches, browse promising web pages, extract key information, and synthesize the findings into a structured report. The process often runs through several iterations of searching and browsing, each guided by what the LLM has learned so far. Research depth is configurable, for example through the maximum number of search iterations (MCP_RESEARCH_MAX_ITERATIONS) and the number of queries per iteration (MCP_RESEARCH_MAX_QUERY). The feature automates time-consuming research for AI models and developers and returns synthesized information rather than raw search results. An example use case is asking an AI assistant to "Compile a report on the main competitors for product X, including their key features and pricing, saving the report to the research folder." The final output is typically a Markdown file containing the structured report, saved to a configurable directory (MCP_RESEARCH_SAVE_DIR).
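The research settings named above are plain environment variables, so a launcher script can assemble and validate them before starting the server. The following is a minimal sketch under that assumption; `research_env` is a hypothetical helper, the numeric values are arbitrary examples rather than the server's defaults, and the positivity check is this sketch's own choice.

```python
from pathlib import Path


def research_env(max_iterations: int, max_queries: int, save_dir: str) -> dict[str, str]:
    """Build the deep-research environment variables as strings."""
    if max_iterations < 1 or max_queries < 1:
        raise ValueError("iteration and query limits must be at least 1")
    return {
        "MCP_RESEARCH_MAX_ITERATIONS": str(max_iterations),
        "MCP_RESEARCH_MAX_QUERY": str(max_queries),
        # Expand "~" so the value is an unambiguous path.
        "MCP_RESEARCH_SAVE_DIR": str(Path(save_dir).expanduser()),
    }


# Example values only; merge the result into the environment used to start the server.
env = research_env(max_iterations=3, max_queries=2, save_dir="~/research")
```

Higher limits mean more searches and page visits per report, so these two numbers are the main lever for trading research depth against run time and LLM cost.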

User Browser Integration (CDP)

mcp-browser-use can connect to and control an existing Chrome/Chromium browser instance launched by the user, rather than always creating a new, isolated one. This is achieved using the Chrome DevTools Protocol (CDP). With the MCP_USE_OWN_BROWSER=true environment variable set and the browser's debugging address given in CHROME_CDP (e.g., http://localhost:9222), the server attaches to the user's running browser. This has two advantages for developers and AI models. First, the AI agent can use the existing browser state, including cookies, logged-in sessions, and history, so it can work with websites that require authentication without handling the login process itself. Second, developers can watch the AI's actions directly in their own browser, which helps with debugging and understanding its behavior. This addresses the problem of automating tasks within authenticated sessions or complex web applications where starting from a clean slate is impractical. For example, a developer could have an AI agent interact with their logged-in cloud console or a specific web-based development tool. When using CDP, settings like MCP_HEADLESS or MCP_KEEP_BROWSER_OPEN are typically ignored, since control rests with the user-launched browser.
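Before pointing the server at a browser, it can help to confirm that the debugging endpoint is reachable. The sketch below builds the two environment variables described above and probes Chrome's `/json/version` HTTP endpoint, which a browser started with `--remote-debugging-port=9222` exposes. `cdp_env` and `probe_cdp` are hypothetical helpers, and the sketch assumes an HTTP(S) address like the example above; whether the server accepts other address forms is not covered here.

```python
import json
from urllib.parse import urlparse
from urllib.request import urlopen


def cdp_env(cdp_url: str) -> dict[str, str]:
    """Return the environment variables that select the user's own browser."""
    parsed = urlparse(cdp_url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"expected an http(s) debugging address, got {cdp_url!r}")
    return {"MCP_USE_OWN_BROWSER": "true", "CHROME_CDP": cdp_url}


def probe_cdp(cdp_url: str, timeout: float = 2.0) -> dict:
    """Fetch browser metadata from the CDP HTTP endpoint.

    Raises an OSError subclass (e.g. URLError) if no browser is listening.
    """
    with urlopen(cdp_url.rstrip("/") + "/json/version", timeout=timeout) as resp:
        return json.load(resp)


env = cdp_env("http://localhost:9222")
# probe_cdp("http://localhost:9222")  # uncomment when a browser is running with debugging enabled
```

Because the attached browser carries real sessions and cookies, a dedicated browser profile for agent work is a sensible precaution.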

Flexible Multi-LLM Support

mcp-browser-use works with Large Language Models (LLMs) from many providers. Supported providers include OpenAI, Anthropic, Google (Gemini), Azure OpenAI, Mistral, Ollama (for local models), DeepSeek, OpenRouter (aggregator), Alibaba (DashScope), Moonshot, and Unbound AI. Developers can therefore choose an LLM based on cost, performance, specific capabilities (e.g., the vision support required by MCP_USE_VISION), context window size, or data privacy preferences (using local models via Ollama). Configuration is managed entirely through environment variables: MCP_MODEL_PROVIDER selects the provider and MCP_MODEL_NAME selects the specific model. The corresponding API keys (e.g., OPENAI_API_KEY, ANTHROPIC_API_KEY) must also be provided, along with endpoint URLs where a custom endpoint is needed. This avoids vendor lock-in and lets developers match the AI's "brain" to the browser automation or research task at hand. For instance, a simple data extraction task might use a fast, inexpensive model, while a complex run_deep_search task could use a model with a larger context window and stronger reasoning capabilities, all without changing the server code.
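Since provider selection is purely environment-driven, switching models amounts to producing a different set of variables. The sketch below shows one way a launcher might do that. `llm_env` is a hypothetical helper; the lowercase provider identifiers ("openai", "anthropic") and the model name are assumptions for illustration, so check the server's documentation for the accepted values. Only the two API key variables named above are mapped.

```python
# Provider identifier -> API key variable. The identifiers are assumed spellings.
API_KEY_VARS = {
    "openai": "OPENAI_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
}


def llm_env(provider: str, model: str, api_key: str, use_vision: bool = False) -> dict[str, str]:
    """Build the environment variables that select an LLM provider and model."""
    if provider not in API_KEY_VARS:
        raise ValueError(f"no API key variable known for provider {provider!r}")
    env = {
        "MCP_MODEL_PROVIDER": provider,
        "MCP_MODEL_NAME": model,
        API_KEY_VARS[provider]: api_key,
    }
    if use_vision:
        # Only meaningful if the chosen model accepts image input.
        env["MCP_USE_VISION"] = "true"
    return env


# "example-model" is a placeholder, not a real model name.
env = llm_env("openai", "example-model", api_key="sk-placeholder", use_vision=True)
```

Keeping one such profile per task type (a cheap model for extraction, a stronger one for deep research) lets the same server installation serve both without code changes.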