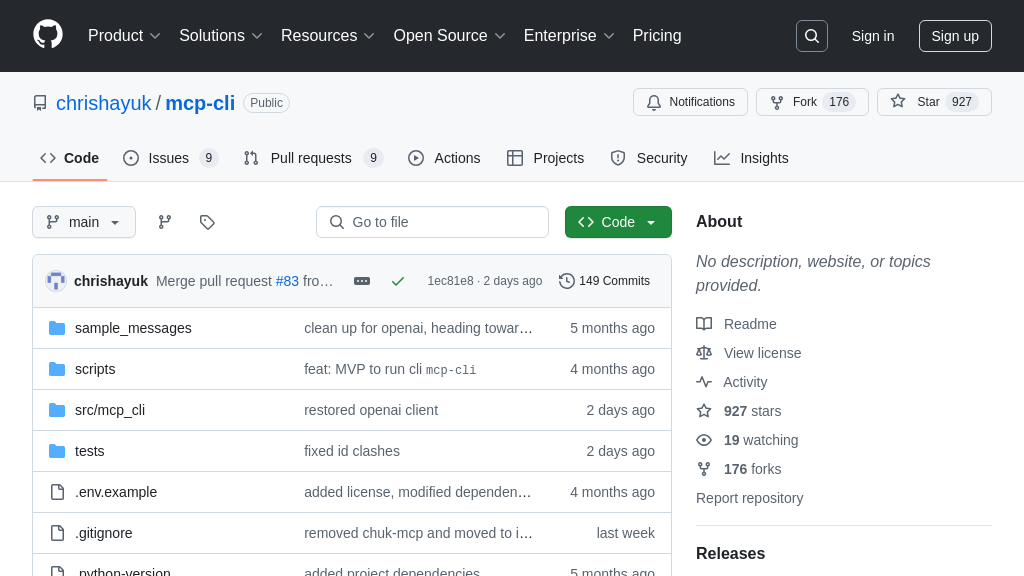

mcp-cli

mcp-cli: A versatile command-line client for interacting with LLMs through the Model Context Protocol (MCP), supporting multiple operating modes and providers.

mcp-cli Solution Overview

The mcp-cli is a versatile command-line interface for interacting with Model Context Protocol (MCP) servers. As a client in the MCP ecosystem, it lets developers work with AI models through multiple operational modes, including chat, interactive, and command-line modes. These modes cover use cases ranging from direct LLM interaction to scriptable automation.

Key features include:

- multi-provider support (OpenAI, Ollama);
- a tool system that automatically discovers and executes server-provided tools;
- conversation management with history tracking and export.

Through these tools, mcp-cli gives AI models access to external data and services from a single command-line interface. It builds on the CHUK-MCP protocol library for MCP communication and adds command completion, formatted output, and resilient resource management. This makes it well suited to everyday development work and experimentation with AI models.

mcp-cli Key Capabilities

Multi-Mode Operation

The mcp-cli offers four operational modes: Chat, Interactive, Command, and Direct Commands.

- **Chat mode** provides a conversational interface. Users talk to the LLM in natural language, and the LLM calls tools automatically.
- **Interactive mode** lets users execute server operations directly with slash commands.
- **Command mode** provides a Unix-friendly interface for scripting and automation, well suited to pipeline integration.
- **Direct Commands** execute individual commands without entering any mode.

Together, these modes cover use cases from interactive problem-solving to automated workflows. For example, a data scientist can explore a dataset in Chat mode, then switch to Command mode to automate a data processing pipeline.
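The mode split can be pictured as one entry point that dispatches to a handler per mode. This minimal sketch uses Python's standard `argparse` subcommands. Several names are hypothetical illustrations, not mcp-cli's documented command-line syntax:

- the subcommands `chat`, `interactive`, `cmd`, and `direct`;
- the `--server` and `--prompt` options;
- the handler functions.

The handlers also return strings instead of starting real sessions.

```python
import argparse


def run_chat(args: argparse.Namespace) -> str:
    # A real chat loop would send user input to the LLM and let it call tools.
    return f"chat mode (server={args.server})"


def run_interactive(args: argparse.Namespace) -> str:
    # A real interactive loop would read slash commands and run them against the server.
    return f"interactive mode (server={args.server})"


def run_command(args: argparse.Namespace) -> str:
    # Non-interactive: one prompt in, one answer out, suitable for shell pipelines.
    return f"command mode: prompt={args.prompt!r}"


def run_direct(args: argparse.Namespace) -> str:
    # A single named operation, executed once without entering any mode.
    return f"direct command: {args.name}"


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(prog="mcp-cli-sketch")
    parser.add_argument("--server", default="demo", help="hypothetical server name")
    modes = parser.add_subparsers(dest="mode", required=True)
    modes.add_parser("chat").set_defaults(handler=run_chat)
    modes.add_parser("interactive").set_defaults(handler=run_interactive)
    cmd = modes.add_parser("cmd")
    cmd.add_argument("--prompt", required=True)
    cmd.set_defaults(handler=run_command)
    direct = modes.add_parser("direct")
    direct.add_argument("name")
    direct.set_defaults(handler=run_direct)
    return parser


def main(argv=None) -> str:
    # Parse the arguments, then dispatch to the handler bound to the chosen mode.
    args = build_parser().parse_args(argv)
    return args.handler(args)


print(main(["cmd", "--prompt", "Summarize the sales table"]))
# command mode: prompt='Summarize the sales table'
```

Keeping each mode behind its own handler means the non-interactive path never touches the chat or interactive loops. That isolation is what makes a command mode easy to drive from shell scripts.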

Robust Tool System

mcp-cli includes a robust tool system that lets AI models interact with external resources and services. It automatically discovers the tools each MCP server provides, and the LLM selects and executes them as needed. The CLI records a history of tool calls, which shows how tools are being used and helps with debugging. It also supports multi-step tool chains, in which the LLM orchestrates several tools to reach a goal. This lets AI models perform tasks beyond their built-in capabilities, such as querying databases, accessing filesystems, or calling APIs. For instance, suppose a server exposes a "search_internet" tool and a "summarize" tool. A model can first gather information with "search_internet", then condense the findings into a short report with "summarize".
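Under MCP, tool discovery and execution are JSON-RPC 2.0 requests. The `tools/list` method returns the tools a server exposes, and `tools/call` runs one by name with an `arguments` object.

This minimal sketch wires those two methods to a hypothetical in-memory `FakeServer`, which stands in for a real server process. The sketch records every call, then chains the "search_internet" and "summarize" tools from the paragraph above. Two simplifications apply:

- The tool behavior and the `ToolClient` class are invented for illustration and are not mcp-cli's implementation.
- In a real session the LLM, not hard-coded calls, decides which tool to run next.

```python
import itertools
import json


class FakeServer:
    """Hypothetical in-memory stand-in for an MCP server.

    Real servers exchange these JSON-RPC 2.0 messages over a transport such as
    stdio, and their tools/list entries also carry a description and inputSchema.
    """

    def __init__(self):
        self.tools = {
            "search_internet": lambda args: f"{args['query']} result A; {args['query']} result B",
            "summarize": lambda args: args["text"].split(";")[0],
        }

    def handle(self, request: dict) -> dict:
        method = request["method"]
        if method == "tools/list":
            result = {"tools": [{"name": name} for name in self.tools]}
        elif method == "tools/call":
            params = request["params"]
            text = self.tools[params["name"]](params["arguments"])
            result = {"content": [{"type": "text", "text": text}]}
        else:
            # Standard JSON-RPC error code for an unsupported method.
            return {"jsonrpc": "2.0", "id": request["id"],
                    "error": {"code": -32601, "message": "Method not found"}}
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}


class ToolClient:
    """Discovers tools, calls them, and keeps a tool-call history."""

    def __init__(self, server: FakeServer):
        self.server = server
        self.history = []
        self._ids = itertools.count(1)

    def _request(self, method: str, params=None) -> dict:
        request = {"jsonrpc": "2.0", "id": next(self._ids), "method": method}
        if params is not None:
            request["params"] = params
        # Serialize and parse to mimic messages crossing a real transport.
        response = self.server.handle(json.loads(json.dumps(request)))
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        return response["result"]

    def discover(self) -> list:
        return [tool["name"] for tool in self._request("tools/list")["tools"]]

    def call(self, name: str, arguments: dict) -> str:
        result = self._request("tools/call", {"name": name, "arguments": arguments})
        text = result["content"][0]["text"]
        self.history.append({"tool": name, "arguments": arguments, "result": text})
        return text


client = ToolClient(FakeServer())
print(client.discover())  # ['search_internet', 'summarize']
found = client.call("search_internet", {"query": "MCP"})
print(client.call("summarize", {"text": found}))  # MCP result A
```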

Advanced Conversation Management

mcp-cli provides advanced conversation management features, including complete history tracking, filtering, and JSON export. Users can:

- view specific ranges of messages;
- save conversations for debugging or analysis;
- compact the history to reduce token usage.

Compaction summarizes earlier interactions so that long sessions stay within the model's context window. Saved histories are particularly valuable for debugging complex interactions and for finding where a model's responses fall short. For example, a developer can use the /save command to export a conversation to a JSON file, then analyze the file to identify where the AI model's responses could be improved.
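This sketch shows one way the history features could fit together:

- a message list with 1-based range viewing;
- role filtering;
- JSON export;
- compaction that replaces older turns with a single summary message.

The `Conversation` class and its method names are hypothetical, not mcp-cli's internals. The default summarizer simply joins truncated messages; an actual compaction step would presumably ask the LLM to write the summary.

```python
import json
from dataclasses import asdict, dataclass
from typing import Callable, List, Optional


@dataclass
class Message:
    role: str  # e.g. "user" or "assistant"
    content: str


class Conversation:
    def __init__(self) -> None:
        self.messages: List[Message] = []

    def add(self, role: str, content: str) -> None:
        self.messages.append(Message(role, content))

    def view(self, start: int, end: Optional[int] = None) -> List[Message]:
        """Messages in the 1-based, inclusive range start..end (one message if end is omitted)."""
        end = start if end is None else end
        return self.messages[start - 1:end]

    def filter(self, role: str) -> List[Message]:
        return [m for m in self.messages if m.role == role]

    def to_json(self) -> str:
        return json.dumps([asdict(m) for m in self.messages], indent=2)

    def save_json(self, path: str) -> None:
        # Export the whole history for later debugging or analysis.
        with open(path, "w", encoding="utf-8") as f:
            f.write(self.to_json())

    def compact(self, keep_last: int = 2,
                summarize: Optional[Callable[[List[Message]], str]] = None) -> None:
        """Replace all but the last `keep_last` messages with one summary message."""
        cut = len(self.messages) - keep_last
        if cut <= 0:
            return  # nothing old enough to compact
        # Placeholder summarizer (hypothetical); a real one would likely call the LLM.
        summarize = summarize or (lambda msgs: " | ".join(m.content[:40] for m in msgs))
        summary = Message("system", "Summary of earlier turns: " + summarize(self.messages[:cut]))
        self.messages = [summary] + self.messages[cut:]


conv = Conversation()
for i, role in enumerate(["user", "assistant", "user", "assistant"], start=1):
    conv.add(role, f"message {i}")
conv.compact(keep_last=2)
print(conv.messages[0].content)  # Summary of earlier turns: message 1 | message 2
```

Keeping the most recent turns verbatim while summarizing older ones is a common trade-off. The model keeps exact wording where it matters most, and the token cost of earlier context is bounded.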

Extensible Provider Support

The mcp-cli supports multiple LLM providers, including OpenAI and Ollama, and its extensible architecture allows more providers to be added. Users can choose the LLM that best suits their needs and switch providers as new models become available. The CLI handles provider-specific details, such as authentication and API endpoints, so users can focus on the task at hand. This flexibility matters because the set of available models changes quickly. For example, a user can switch from OpenAI's gpt-4o to Ollama's llama3.2 with command-line arguments and compare how the two models perform on the same task.
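An extensible provider layer can be as small as a registry that maps a provider name to its endpoint and authentication details. In this sketch, `ProviderConfig`, `register_provider`, and `resolve` are hypothetical names, not mcp-cli's internals. The endpoints are the public OpenAI API base URL and Ollama's default local address, and no network request is made.

```python
import os
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class ProviderConfig:
    name: str
    base_url: str
    api_key_env: Optional[str]  # environment variable holding the API key, if any


PROVIDERS: Dict[str, ProviderConfig] = {}


def register_provider(config: ProviderConfig) -> ProviderConfig:
    """Adding a provider is one registration call; code that resolves providers is unchanged."""
    PROVIDERS[config.name] = config
    return config


# OpenAI needs an API key; a local Ollama server does not.
register_provider(ProviderConfig("openai", "https://api.openai.com/v1", "OPENAI_API_KEY"))
register_provider(ProviderConfig("ollama", "http://localhost:11434", None))


def resolve(provider: str, model: str) -> dict:
    """Turn a (provider, model) choice into connection settings."""
    cfg = PROVIDERS.get(provider)
    if cfg is None:
        raise ValueError(f"unknown provider {provider!r}; known: {sorted(PROVIDERS)}")
    headers = {}
    if cfg.api_key_env:
        key = os.environ.get(cfg.api_key_env)
        if not key:
            raise RuntimeError(f"set {cfg.api_key_env} to use {provider}")
        headers["Authorization"] = f"Bearer {key}"
    return {"base_url": cfg.base_url, "model": model, "headers": headers}


print(resolve("ollama", "llama3.2"))
# {'base_url': 'http://localhost:11434', 'model': 'llama3.2', 'headers': {}}
```

Switching from `resolve("openai", "gpt-4o")` to `resolve("ollama", "llama3.2")` changes only the arguments. Supporting another provider takes one more `register_provider` call.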

Resilient Resource Management

mcp-cli is designed for resilient resource management. It cleans up asyncio resources properly, handles errors gracefully, and restores the terminal cleanly on exit. It also supports multiple simultaneous server connections, so users can work with several MCP servers, and the tools and data sources they expose, at the same time. This robustness is essential for long-running tasks and complex workflows. Instead of crashing unexpectedly, the CLI reports informative error messages that help users troubleshoot. For example, if a server connection is lost, the CLI attempts to reconnect automatically and notifies the user of the issue.
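A common asyncio pattern for this kind of cleanup is `contextlib.AsyncExitStack`. Each connection registers its close callback as soon as it opens, so every open connection is closed in reverse order, even if a later connection fails. This sketch combines that pattern with retries and exponential backoff. Two parts are assumptions, not mcp-cli's documented behavior:

- `ServerConnection` is a hypothetical stand-in that fails a set number of times before connecting.
- The retry policy (three attempts, doubling delay) is invented for illustration.

```python
import asyncio
import sys
from contextlib import AsyncExitStack
from typing import List


class ServerConnection:
    """Hypothetical connection to one MCP server that fails `fail_times` times first."""

    def __init__(self, name: str, fail_times: int = 0) -> None:
        self.name = name
        self.open = False
        self._fail_times = fail_times

    async def connect(self) -> None:
        if self._fail_times > 0:
            self._fail_times -= 1
            raise ConnectionError(f"{self.name}: connection refused")
        self.open = True

    async def close(self) -> None:
        self.open = False


async def connect_with_retry(conn: ServerConnection, attempts: int = 3,
                             base_delay: float = 0.01) -> ServerConnection:
    """Try to connect up to `attempts` times, doubling the delay after each failure."""
    delay = base_delay
    for attempt in range(1, attempts + 1):
        try:
            await conn.connect()
            return conn
        except ConnectionError as exc:
            if attempt == attempts:
                raise  # give up; the caller's exit stack still closes earlier connections
            # Notify the user instead of failing silently.
            print(f"warning: {exc}; retrying in {delay:.2f}s", file=sys.stderr)
            await asyncio.sleep(delay)
            delay *= 2
    raise ValueError("attempts must be at least 1")


async def run(servers: List[ServerConnection]) -> List[str]:
    """Open every connection; the exit stack closes them all, even on failure."""
    async with AsyncExitStack() as stack:
        for conn in servers:
            await connect_with_retry(conn)
            stack.push_async_callback(conn.close)
        # A real session would exchange messages with the servers here.
        return [conn.name for conn in servers if conn.open]


servers = [ServerConnection("sqlite"), ServerConnection("files", fail_times=2)]
print(asyncio.run(run(servers)))  # ['sqlite', 'files'] (after two warnings on stderr)
```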