mcp-monitor

mcp-monitor: Real-time system metrics for AI models via MCP. Enhance AI decisions with system awareness.

mcp-monitor Solution Overview

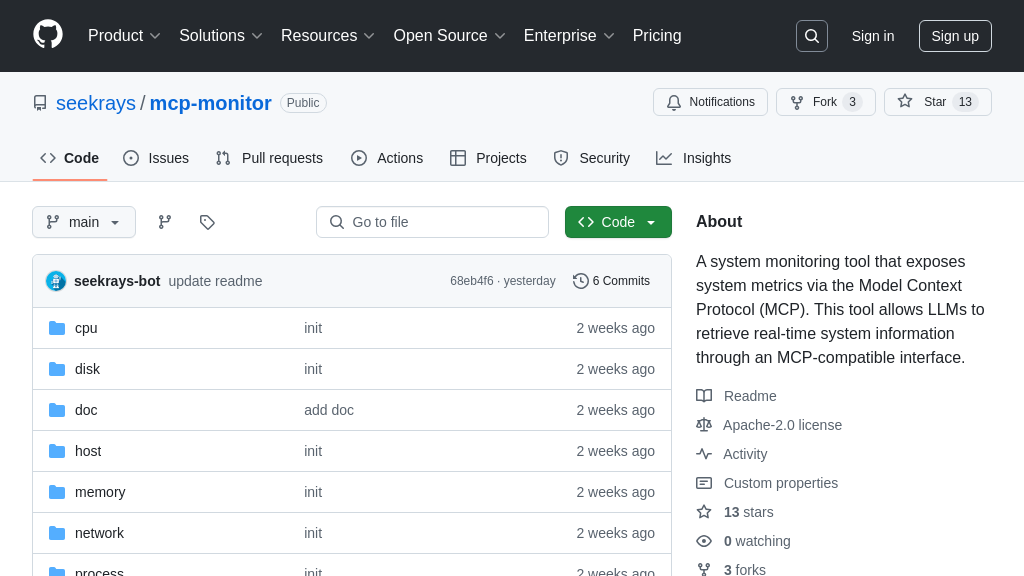

mcp-monitor provides real-time system metrics to AI models through the Model Context Protocol (MCP). Running as an MCP server, it gives Large Language Models (LLMs) access to key information about the machine they run on, so that the decisions they make and the applications built on them can take the current state of the system into account.

It reports CPU usage, memory consumption, disk I/O, network statistics, host details, and process information. Clients retrieve this data by calling MCP tools such as get_cpu_info, get_memory_info, and get_network_info, passing parameters to narrow the scope of each query.
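For illustration, a call to one of these tools travels as a JSON-RPC 2.0 `tools/call` request, as defined by the MCP specification. The helper below is a minimal sketch of how a client might build such a request; the function name `build_tool_call` is hypothetical, and the `arguments` object is left empty because the exact parameter names each mcp-monitor tool accepts are not assumed here.

```python
import json
from typing import Any, Optional


def build_tool_call(request_id: int, tool_name: str,
                    arguments: Optional[dict[str, Any]] = None) -> str:
    """Build a JSON-RPC 2.0 `tools/call` request for an MCP server."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {
            "name": tool_name,
            # Tool-specific parameters go here; left empty because the
            # parameter names of mcp-monitor's tools are not assumed.
            "arguments": arguments or {},
        },
    }
    return json.dumps(message)


print(build_tool_call(1, "get_cpu_info"))
```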

Integrating mcp-monitor lets developers give their AI models visibility into their execution environment, which can help those models react to it and use resources more efficiently. The server communicates over standard input/output (the MCP stdio transport), so it works with any MCP-compliant client that supports that transport.
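Under the MCP stdio transport, the client starts the server as a subprocess and exchanges JSON-RPC messages over its stdin and stdout, one message per line with no embedded newlines. The helpers below are a transport-only sketch of that framing; they use an in-memory stream in place of a real mcp-monitor process, and the function names are hypothetical.

```python
import io
import json
from typing import Any, Optional, TextIO


def write_message(stream: TextIO, message: dict[str, Any]) -> None:
    """Serialize one JSON-RPC message as a single line.

    json.dumps escapes newlines inside strings, so the line never
    contains an embedded newline.
    """
    stream.write(json.dumps(message, separators=(",", ":")) + "\n")
    stream.flush()


def read_message(stream: TextIO) -> Optional[dict[str, Any]]:
    """Read one newline-delimited message; return None at end of stream."""
    line = stream.readline()
    if not line:
        return None
    return json.loads(line)


# Simulate a round trip through an in-memory pipe.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
pipe.seek(0)
print(read_message(pipe))
```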

mcp-monitor Key Capabilities

Real-time System Monitoring

mcp-monitor collects CPU usage, memory consumption, disk I/O, network traffic, host information, and process data, and exposes it to LLMs via MCP. Acting as an MCP server, it answers requests from MCP-compatible clients, so each response reflects the system's state at the time of the request. This lets an LLM base its decisions on current conditions rather than on stale assumptions, which is what context-aware applications need. For example, an LLM tasked with optimizing resource allocation could use these readings to decide how CPU or memory limits should be adjusted for different processes; applying those changes would require a separate tool, since mcp-monitor's role is to report metrics.
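On the client side, an MCP tool result arrives as a list of content items. The sketch below extracts the text items from a `tools/call` result and, assuming the server encodes its metrics as a single JSON object in that text (an assumption; the payload format is not specified here), decodes them into a dictionary. The function name and the sample field names are hypothetical, not mcp-monitor's actual schema.

```python
import json
from typing import Any


def parse_metrics(result: dict[str, Any]) -> dict[str, Any]:
    """Extract and decode JSON metrics from an MCP tool result."""
    if result.get("isError"):
        raise RuntimeError("tool call failed: " + str(result.get("content")))
    # Keep only text content items; other types (e.g. images) are ignored.
    texts = [item["text"] for item in result.get("content", [])
             if item.get("type") == "text"]
    # Assumption: the server returns one JSON object as text.
    return json.loads("".join(texts))


# Hypothetical result; field names are illustrative only.
sample = {"content": [{"type": "text", "text": '{"cpu_percent": 37.5, "cores": 8}'}]}
metrics = parse_metrics(sample)
print(metrics["cpu_percent"])
```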

Under the hood, mcp-monitor gathers metrics through system calls and libraries, then formats the data as a standard MCP tool response. Metrics are gathered when a request arrives rather than on a fixed schedule, so the update frequency follows the LLM's request rate: the data is fresh whenever it is requested, and the server does little work while idle.
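To illustrate this on-demand model, here is a minimal sketch of a tool handler that samples metrics only when it is called and wraps them in an MCP tool result (a `content` list of `text` items). It uses Python's standard library (`os.getloadavg`, `os.cpu_count`, `shutil.disk_usage`) as stand-ins; the handler name `handle_get_host_snapshot` is hypothetical, this is not mcp-monitor's actual implementation, and `os.getloadavg` is available only on Unix-like systems.

```python
import json
import os
import shutil
from typing import Any


def handle_get_host_snapshot(path: str = "/") -> dict[str, Any]:
    """Hypothetical handler: collect metrics at call time, no background polling."""
    load1, load5, load15 = os.getloadavg()  # Unix-only
    disk = shutil.disk_usage(path)
    metrics = {
        "cpu_count": os.cpu_count(),
        "load_average": [load1, load5, load15],
        "disk_total_bytes": disk.total,
        "disk_used_percent": round(100 * disk.used / disk.total, 1),
    }
    # An MCP tool result carries a list of content items.
    return {"content": [{"type": "text", "text": json.dumps(metrics)}]}


print(handle_get_host_snapshot())
```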

Context-Aware AI Decision-Making

By providing real-time system information, mcp-monitor enables LLMs to make more informed, context-aware decisions. An LLM can adjust its behavior to the current system load, available resources, and network conditions. For instance, if CPU usage is high, it might defer less critical tasks or scale down resource-intensive operations. Similarly, if network statistics indicate congestion, it could favor local data caches over remote data sources. This adaptability can improve an LLM's performance and reliability, especially in dynamic or resource-constrained environments.
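The CPU-based deferral described above amounts to a simple threshold policy. The sketch below shows one way to express it; the `Task` type, the `critical` flag, and the 80% threshold are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    critical: bool  # hypothetical priority flag


def schedule(tasks: list[Task], cpu_percent: float,
             threshold: float = 80.0) -> tuple[list[Task], list[Task]]:
    """Split tasks into (run_now, deferred) based on current CPU usage."""
    if cpu_percent < threshold:
        return list(tasks), []
    # Under high load, run only critical tasks and defer the rest.
    run_now = [t for t in tasks if t.critical]
    deferred = [t for t in tasks if not t.critical]
    return run_now, deferred


tasks = [Task("serve-request", True), Task("rebuild-index", False)]
run_now, deferred = schedule(tasks, cpu_percent=92.0)
print([t.name for t in run_now], [t.name for t in deferred])
```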

Consider an LLM that manages a web server. Using mcp-monitor, it can detect a sudden spike in network traffic and, given access to the appropriate provisioning tools, allocate more resources to the server before performance degrades.
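Operating systems usually report network traffic as cumulative byte counters, so detecting a spike means sampling twice and comparing rates. The sketch below assumes the network statistics arrive in that cumulative form; the function names, the 3x spike factor, and the sample values are hypothetical.

```python
def throughput_bps(bytes_before: int, bytes_after: int, interval_s: float) -> float:
    """Bytes per second between two samples of a cumulative counter."""
    if interval_s <= 0:
        raise ValueError("interval must be positive")
    if bytes_after < bytes_before:
        # Counter reset (e.g. interface restart): the rate is unknown.
        raise ValueError("counter went backwards")
    return (bytes_after - bytes_before) / interval_s


def is_spike(current_bps: float, baseline_bps: float, factor: float = 3.0) -> bool:
    """Flag a spike when throughput exceeds the baseline by `factor`."""
    return current_bps > factor * baseline_bps


# Hypothetical samples of bytes received, taken 5 seconds apart.
current = throughput_bps(1_000_000, 26_000_000, 5.0)
print(current, is_spike(current, baseline_bps=1_000_000))
```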

Automated Resource Optimization

mcp-monitor supports automated resource optimization by giving LLMs the data they need to manage system resources. By analyzing these metrics, an LLM can identify bottlenecks, anticipate resource shortages, and plan how resources should be allocated. For example, if it detects that a process is consuming excessive memory, it can lower that process's memory limit or migrate it to a less congested server, provided it also has tools that can carry out those actions. Automating this loop reduces the need for manual intervention and keeps resource usage efficient.
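The detection half of that loop can be sketched as a filter over process data. In the example below, the record fields (`pid`, `name`, `rss_mb`) and the 2048 MB limit are hypothetical; acting on the flagged processes is left to whatever other tools the LLM has.

```python
from typing import Any


def find_memory_hogs(processes: list[dict[str, Any]], limit_mb: float) -> list[dict[str, Any]]:
    """Return processes whose resident memory exceeds limit_mb, largest first."""
    hogs = [p for p in processes if p["rss_mb"] > limit_mb]
    return sorted(hogs, key=lambda p: p["rss_mb"], reverse=True)


# Hypothetical process records.
procs = [
    {"pid": 101, "name": "worker", "rss_mb": 3200.0},
    {"pid": 202, "name": "nginx", "rss_mb": 120.0},
    {"pid": 303, "name": "etl-job", "rss_mb": 5100.0},
]
for p in find_memory_hogs(procs, limit_mb=2048):
    print(p["pid"], p["name"], p["rss_mb"])
```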

Imagine an LLM managing a cluster of database servers. By monitoring disk I/O and CPU usage through an mcp-monitor instance on each server, the LLM can decide how to rebalance database shards across the cluster so that no single server becomes overloaded.
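One simple way to turn per-shard load readings into a placement is a greedy heuristic: take shards from heaviest to lightest and assign each to the currently least-loaded server. The sketch below assumes each shard's load has already been reduced to a single number (for example, a combined disk I/O and CPU score, which is hypothetical); the heuristic is not guaranteed to find the optimal balance.

```python
import heapq


def rebalance(shard_loads: dict[str, float], servers: list[str]) -> dict[str, list[str]]:
    """Greedy heaviest-first assignment of shards to the least-loaded server."""
    heap = [(0.0, s) for s in servers]  # (current total load, server)
    heapq.heapify(heap)
    placement: dict[str, list[str]] = {s: [] for s in servers}
    for shard, load in sorted(shard_loads.items(), key=lambda kv: kv[1], reverse=True):
        total, server = heapq.heappop(heap)  # least-loaded server so far
        placement[server].append(shard)
        heapq.heappush(heap, (total + load, server))
    return placement


# Hypothetical load scores per shard.
loads = {"s1": 40.0, "s2": 35.0, "s3": 25.0, "s4": 20.0, "s5": 10.0}
print(rebalance(loads, ["db-a", "db-b"]))
```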

Integration Advantages

mcp-monitor is designed to integrate with any MCP-compatible LLM client. It runs in stdio mode, so the client communicates with it over standard input and output without any network setup. System metrics are exposed as standard MCP tools, which clients can discover and call like those of any other MCP server. This reduces the development effort needed to add system monitoring to AI applications, and because it follows the MCP specification, mcp-monitor interoperates with other MCP-compliant servers and tools.
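Because MCP servers advertise their tools through the standard `tools/list` method, a client can discover mcp-monitor's tools instead of hard-coding them. The sketch below builds that request and extracts tool names from a response; the function names are hypothetical, and the sample response is abbreviated (a real one also carries descriptions and input schemas).

```python
import json
from typing import Any


def build_tools_list(request_id: int) -> str:
    """JSON-RPC 2.0 request asking an MCP server to enumerate its tools."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "method": "tools/list"})


def tool_names(response: dict[str, Any]) -> list[str]:
    """Pull tool names out of a tools/list response."""
    return [tool["name"] for tool in response["result"]["tools"]]


# Hypothetical, abbreviated response.
response = {
    "jsonrpc": "2.0",
    "id": 2,
    "result": {"tools": [{"name": "get_cpu_info"},
                         {"name": "get_memory_info"},
                         {"name": "get_network_info"}]},
}
print(tool_names(response))
```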

To add mcp-monitor to an existing AI workflow, build the binary and register it in the MCP client's server configuration; the client then launches the binary itself and communicates with it over stdio. This plug-and-play setup makes it straightforward for developers to give their AI models real-time system awareness.
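Many MCP clients register stdio servers through a JSON configuration that names the command to launch; Claude Desktop, for example, uses an `mcpServers` object whose entries have `command` and `args` fields. The helper below is a sketch that adds an mcp-monitor entry to a configuration of that shape. The function name and binary path are placeholders, and other clients may use a different layout.

```python
import copy
import json
from typing import Any, Optional


def add_mcp_server(config: dict[str, Any], name: str, command: str,
                   args: Optional[list[str]] = None) -> dict[str, Any]:
    """Return a copy of `config` with a stdio server registered under `name`."""
    updated = copy.deepcopy(config)  # leave the caller's config untouched
    servers = updated.setdefault("mcpServers", {})
    servers[name] = {"command": command, "args": list(args or [])}
    return updated


# Placeholder path: point this at wherever the compiled binary lives.
config = add_mcp_server({}, "mcp-monitor", "/path/to/mcp-monitor")
print(json.dumps(config, indent=2))
```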