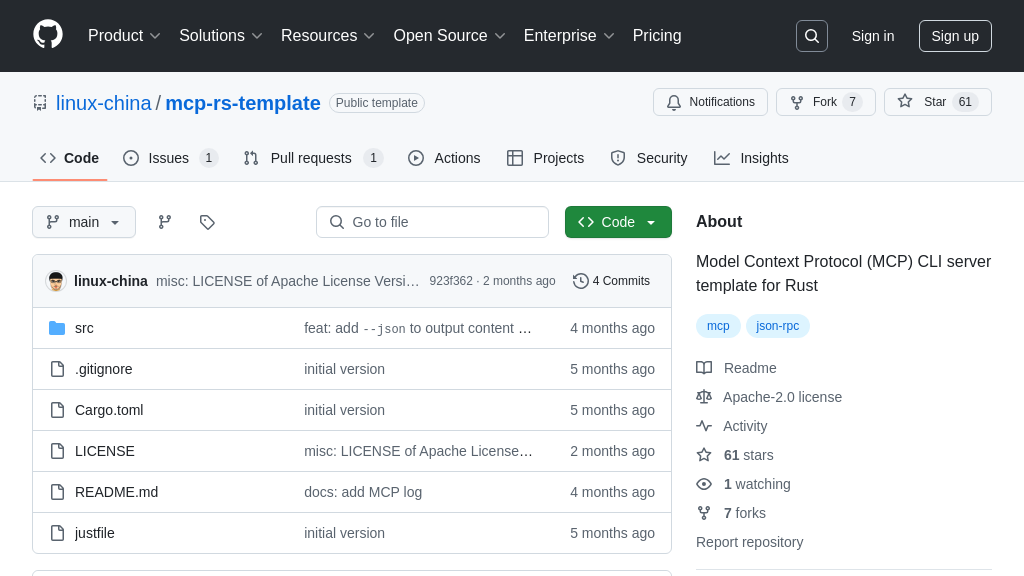

mcp-rs-template

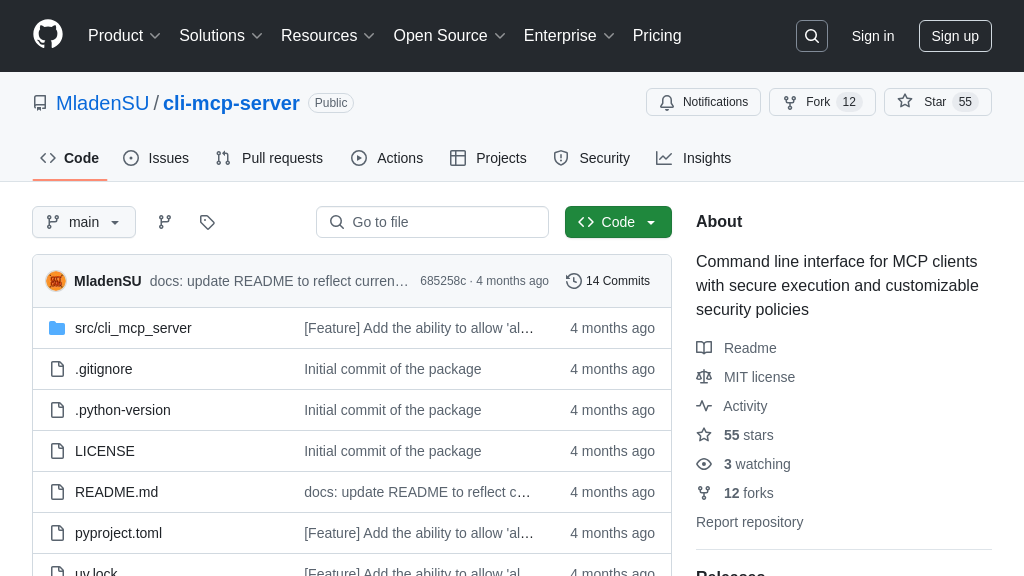

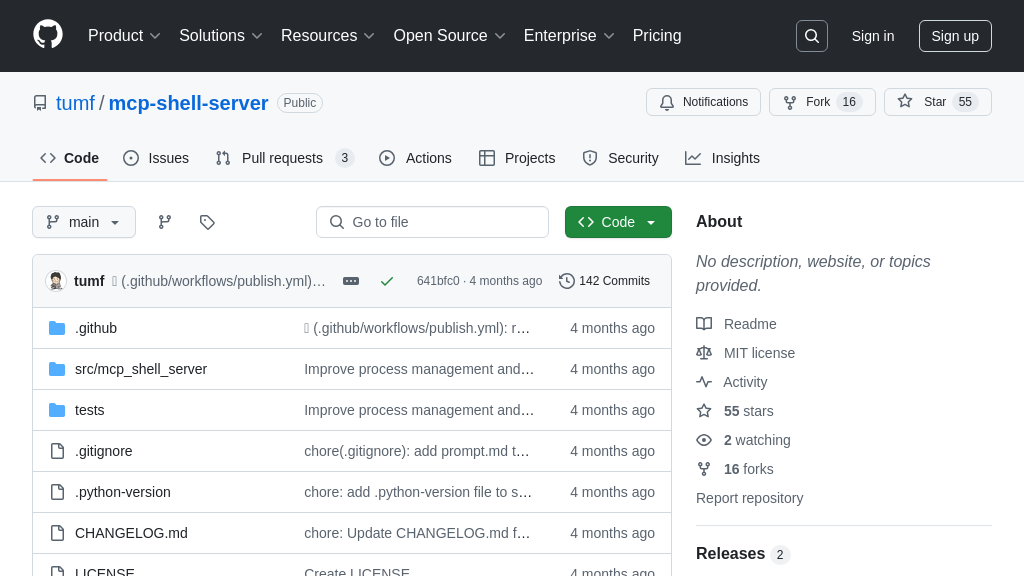

mcp-rs-template: Rust template for building MCP CLI servers, enabling seamless LLM integration with external resources.

mcp-rs-template Solution Overview

mcp-rs-template is a Rust application template for building Model Context Protocol (MCP) CLI servers. It helps developers connect Large Language Models (LLMs) to external data sources and tools, extending what models can do in AI-powered IDEs, chat interfaces, and custom AI workflows.

The template uses the rust-rpc-router crate to handle JSON-RPC routing. Developers define resources, prompts, and tools by editing the provided modules and JSON templates, and the template's CLI options enable the MCP server and display the available resources, prompts, and tools.
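rust-rpc-router's own API is not reproduced here. As a rough, std-only illustration of what JSON-RPC routing involves, the following sketch maps method names to handler functions and returns the JSON-RPC 2.0 "Method not found" code (-32601) for anything unregistered. `Router`, `Handler`, `RpcError`, and the stub handlers are hypothetical names; `tools/list` and `resources/list` are method names from the MCP specification, and params and results are kept as raw JSON strings to avoid a serde dependency.

```rust
use std::collections::HashMap;

/// Hypothetical handler type (not the rust-rpc-router API): takes raw JSON
/// params and returns a raw JSON result or a JSON-RPC error.
pub type Handler = fn(&str) -> Result<String, RpcError>;

#[derive(Debug, PartialEq)]
pub struct RpcError {
    pub code: i64,
    pub message: String,
}

/// JSON-RPC 2.0 reserved error code for "Method not found".
pub const METHOD_NOT_FOUND: i64 = -32601;

#[derive(Default)]
pub struct Router {
    routes: HashMap<&'static str, Handler>,
}

impl Router {
    pub fn new() -> Self {
        Self::default()
    }

    /// Register a handler under a method name such as "tools/list".
    pub fn route(mut self, method: &'static str, handler: Handler) -> Self {
        self.routes.insert(method, handler);
        self
    }

    /// Look up the method and invoke its handler, or report an unknown method.
    pub fn call(&self, method: &str, params: &str) -> Result<String, RpcError> {
        match self.routes.get(method) {
            Some(handler) => handler(params),
            None => Err(RpcError {
                code: METHOD_NOT_FOUND,
                message: format!("Method not found: {method}"),
            }),
        }
    }
}

// Stub handlers returning empty MCP list results.
fn list_tools(_params: &str) -> Result<String, RpcError> {
    Ok(r#"{"tools":[]}"#.to_string())
}

fn list_resources(_params: &str) -> Result<String, RpcError> {
    Ok(r#"{"resources":[]}"#.to_string())
}

pub fn build_router() -> Router {
    Router::new()
        .route("tools/list", list_tools)
        .route("resources/list", list_resources)
}
```

A real server would first parse each request's `method` and `params` fields (for example with serde_json) before calling `call`, then wrap the outcome in a JSON-RPC response object carrying the request `id`.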

By providing a standardized interface, mcp-rs-template reduces integration complexity, letting developers focus on building innovative AI-driven applications. Its integration with Claude Desktop shows how it works with a real MCP client. The template offers a solid foundation for building custom MCP servers, fostering a more connected and versatile AI ecosystem.

mcp-rs-template Key Capabilities

Rust-Based MCP Server Template

The mcp-rs-template provides a foundational structure for building MCP-compliant servers in Rust. It takes care of server setup, request handling, and the communication layer, so developers can focus on the specific logic of their resources, tools, and prompts. The template uses the rust-rpc-router crate to simplify defining JSON-RPC endpoints; JSON-RPC 2.0 is the message format MCP is built on. Rust's performance, memory safety, and concurrency features make the template well suited to building robust, scalable MCP servers.
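For a CLI server, the communication layer is typically MCP's stdio transport, where each JSON-RPC message travels as a single line with no embedded newlines. A minimal sketch of that read-dispatch-write loop, assuming a hypothetical `serve` function that is generic over `BufRead` and `Write` so it can run on stdin/stdout or on in-memory buffers; the `dispatch` closure stands in for the router and returns `None` for notifications, which get no reply.

```rust
use std::io::{self, BufRead, Write};

/// Read one JSON-RPC message per line, pass it to `dispatch`, and write any
/// response back as a single line. `dispatch` returns `None` for messages
/// that need no reply (such as notifications).
pub fn serve<R, W, F>(input: R, mut output: W, mut dispatch: F) -> io::Result<()>
where
    R: BufRead,
    W: Write,
    F: FnMut(&str) -> Option<String>,
{
    for line in input.lines() {
        let line = line?;
        // Skip blank lines rather than treating them as malformed requests.
        if line.trim().is_empty() {
            continue;
        }
        if let Some(response) = dispatch(&line) {
            // Messages on this transport must not contain embedded newlines.
            debug_assert!(!response.contains('\n'));
            writeln!(output, "{response}")?;
            // Flush so the client sees each response immediately.
            output.flush()?;
        }
    }
    Ok(())
}
```

In a binary this would be wired up roughly as `serve(std::io::stdin().lock(), std::io::stdout(), |msg| ...)`, with the closure parsing `msg` and calling the router.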

For example, a developer could use this template to create an MCP server that provides real-time stock data to an AI model. The template receives incoming requests, routes them to the appropriate handler, and serializes each response back to the MCP client, which passes it on to the model. The developer only needs to implement the logic that fetches the stock data from an external API.
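To make that division of labor concrete, here is a sketch of the developer-written part of such a stock-price tool. `QuoteSource`, `FixedQuotes`, and `get_stock_price` are hypothetical names; `FixedQuotes` stands in for a client that would call a real market-data API.

```rust
use std::collections::HashMap;

/// Hypothetical abstraction over an external market-data API.
pub trait QuoteSource {
    fn latest_price(&self, symbol: &str) -> Result<f64, String>;
}

/// Stand-in for a real HTTP client: serves fixed prices from a map.
pub struct FixedQuotes(pub HashMap<String, f64>);

impl QuoteSource for FixedQuotes {
    fn latest_price(&self, symbol: &str) -> Result<f64, String> {
        self.0
            .get(symbol)
            .copied()
            .ok_or_else(|| format!("unknown symbol: {symbol}"))
    }
}

/// Tool handler: validates the argument, asks the data source for a price,
/// and formats text for the model. Routing and serialization are left to
/// the surrounding server code.
pub fn get_stock_price(source: &dyn QuoteSource, symbol: &str) -> Result<String, String> {
    let symbol = symbol.trim().to_uppercase();
    if symbol.is_empty() {
        return Err("missing `symbol` argument".to_string());
    }
    let price = source.latest_price(&symbol)?;
    Ok(format!("{symbol}: {price:.2}"))
}
```

Taking `&dyn QuoteSource` lets the same handler run against a test double or a live API client chosen at startup.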

Standardized Resource/Tool Integration

This template enforces a standardized way to integrate external resources and tools with AI models. Because it follows the MCP specification, a server built from it can work with any MCP-compliant client. This standardization makes it simpler to connect AI models to a wide range of data sources and services, improving interoperability and reducing integration costs. The template provides clear guidelines and examples for defining resources, tools, and prompts, so developers can extend the server with new functionality.
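A sketch of how tool, resource, and prompt definitions might be modeled in Rust. The fields loosely follow the MCP specification's `Tool` (`name`, `description`, `inputSchema`), `Resource` (`uri`, `name`, `mimeType`), and `Prompt` (`name`, `description`, `arguments`) objects, simplified; the `Catalog` type and its helper methods are hypothetical, and a real server would typically serialize these with serde using camelCase field names.

```rust
/// Simplified MCP `Tool`; `input_schema` holds raw JSON Schema text.
#[derive(Debug, Clone)]
pub struct Tool {
    pub name: String,
    pub description: String,
    pub input_schema: String, // serialized as "inputSchema"
}

/// Simplified MCP `Resource`.
#[derive(Debug, Clone)]
pub struct Resource {
    pub uri: String,
    pub name: String,
    pub mime_type: Option<String>, // serialized as "mimeType"
}

#[derive(Debug, Clone)]
pub struct PromptArgument {
    pub name: String,
    pub required: bool,
}

/// Simplified MCP `Prompt`.
#[derive(Debug, Clone)]
pub struct Prompt {
    pub name: String,
    pub description: String,
    pub arguments: Vec<PromptArgument>,
}

/// Hypothetical container for what the server advertises through
/// tools/list, resources/list, and prompts/list.
#[derive(Debug, Default)]
pub struct Catalog {
    pub tools: Vec<Tool>,
    pub resources: Vec<Resource>,
    pub prompts: Vec<Prompt>,
}

impl Catalog {
    /// Find a tool definition by name.
    pub fn tool(&self, name: &str) -> Option<&Tool> {
        self.tools.iter().find(|t| t.name == name)
    }

    /// Names of the arguments a client must supply for a prompt
    /// (empty if the prompt is unknown).
    pub fn required_args(&self, prompt: &str) -> Vec<&str> {
        self.prompts
            .iter()
            .find(|p| p.name == prompt)
            .map(|p| {
                p.arguments
                    .iter()
                    .filter(|a| a.required)
                    .map(|a| a.name.as_str())
                    .collect()
            })
            .unwrap_or_default()
    }
}
```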

Imagine an AI-powered IDE that needs to access code snippets from a remote repository. Using mcp-rs-template, a developer can create an MCP server that exposes the repository as a resource. The IDE's MCP client can then request code snippets from the server, enabling the AI model to provide context-aware code suggestions.
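A sketch of such a repository-backed resource provider, assuming a hypothetical `repo://` URI scheme and an in-memory snapshot of file contents; `list` and `read` correspond roughly to MCP's `resources/list` and `resources/read` requests, and `RepoResources` is an illustrative name.

```rust
use std::collections::HashMap;

/// Hypothetical provider exposing repository files under URIs such as
/// `repo://src/main.rs`.
pub struct RepoResources {
    files: HashMap<String, String>, // path -> file contents
}

impl RepoResources {
    pub fn new(files: HashMap<String, String>) -> Self {
        Self { files }
    }

    /// URIs a client would see from resources/list, sorted for stable output.
    pub fn list(&self) -> Vec<String> {
        let mut uris: Vec<String> = self.files.keys().map(|p| format!("repo://{p}")).collect();
        uris.sort();
        uris
    }

    /// Contents for resources/read; other schemes and unknown paths are errors.
    pub fn read(&self, uri: &str) -> Result<&str, String> {
        let path = uri
            .strip_prefix("repo://")
            .ok_or_else(|| format!("unsupported URI: {uri}"))?;
        self.files
            .get(path)
            .map(String::as_str)
            .ok_or_else(|| format!("resource not found: {uri}"))
    }
}
```

Serving only the paths present in the snapshot means a request can never reach files outside the exposed repository.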

Customizable Handlers & Data Sources

The mcp-rs-template offers a high degree of flexibility in customizing server handlers and integrating with various data sources. Developers can easily modify the provided handlers for prompts, resources, and tools to suit their specific needs. The template supports both in-memory data storage and integration with external databases or APIs. This flexibility allows developers to adapt the template to a wide range of use cases, from simple data retrieval to complex data processing and manipulation. The use of JSON files for prompts, resources, and tools allows for easy configuration and modification without recompilation.
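One common way to get this flexibility is to write handlers against a storage trait rather than a concrete backend. In this sketch, `Store`, `MemoryStore`, `save_note`, and `read_note` are hypothetical names; a database- or API-backed type implementing `Store` could replace `MemoryStore` without any change to the handlers.

```rust
use std::collections::HashMap;

/// Hypothetical storage abstraction that handlers depend on.
pub trait Store {
    fn get(&self, key: &str) -> Option<String>;
    fn put(&mut self, key: &str, value: String);
}

/// In-memory backend; an external database client could implement `Store` too.
#[derive(Default)]
pub struct MemoryStore {
    data: HashMap<String, String>,
}

impl Store for MemoryStore {
    fn get(&self, key: &str) -> Option<String> {
        self.data.get(key).cloned()
    }

    fn put(&mut self, key: &str, value: String) {
        self.data.insert(key.to_string(), value);
    }
}

/// Tool handler that writes through whatever backend it is given.
pub fn save_note<S: Store>(store: &mut S, key: &str, text: &str) -> String {
    store.put(key, text.to_string());
    format!("saved {key}")
}

/// Tool handler that reads a note or reports that it is missing.
pub fn read_note<S: Store>(store: &S, key: &str) -> Result<String, String> {
    store.get(key).ok_or_else(|| format!("no note named {key}"))
}
```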

For instance, a data scientist could use the template to build an MCP server that provides access to a proprietary dataset. They can customize the resource handlers to implement authentication and authorization, so that only authorized clients can access the data. The server can then be deployed as a microservice, offering a secure and scalable way to share the dataset with AI models.
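A sketch of that authentication and authorization step, assuming a hypothetical token-based `AccessPolicy` that maps each token to the datasets it may read. Missing or unknown tokens fail authentication; valid tokens without a grant for the requested dataset fail authorization. The check runs before `fetch`, so a rejected request never touches the data. `AccessPolicy`, `AccessError`, and `read_dataset` are illustrative names.

```rust
use std::collections::{HashMap, HashSet};

#[derive(Debug, PartialEq)]
pub enum AccessError {
    Unauthenticated,
    Forbidden,
}

/// Hypothetical policy: each API token maps to the datasets it may read.
pub struct AccessPolicy {
    grants: HashMap<String, HashSet<String>>,
}

impl AccessPolicy {
    pub fn new(grants: HashMap<String, HashSet<String>>) -> Self {
        Self { grants }
    }

    /// Authenticate the token, then authorize it for `dataset`.
    pub fn check(&self, token: Option<&str>, dataset: &str) -> Result<(), AccessError> {
        let token = token.ok_or(AccessError::Unauthenticated)?;
        let allowed = self.grants.get(token).ok_or(AccessError::Unauthenticated)?;
        if allowed.contains(dataset) {
            Ok(())
        } else {
            Err(AccessError::Forbidden)
        }
    }
}

/// Wraps a resource read so the data is fetched only after the check passes.
pub fn read_dataset<F>(
    policy: &AccessPolicy,
    token: Option<&str>,
    dataset: &str,
    fetch: F,
) -> Result<String, AccessError>
where
    F: FnOnce(&str) -> String,
{
    policy.check(token, dataset)?;
    Ok(fetch(dataset))
}
```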