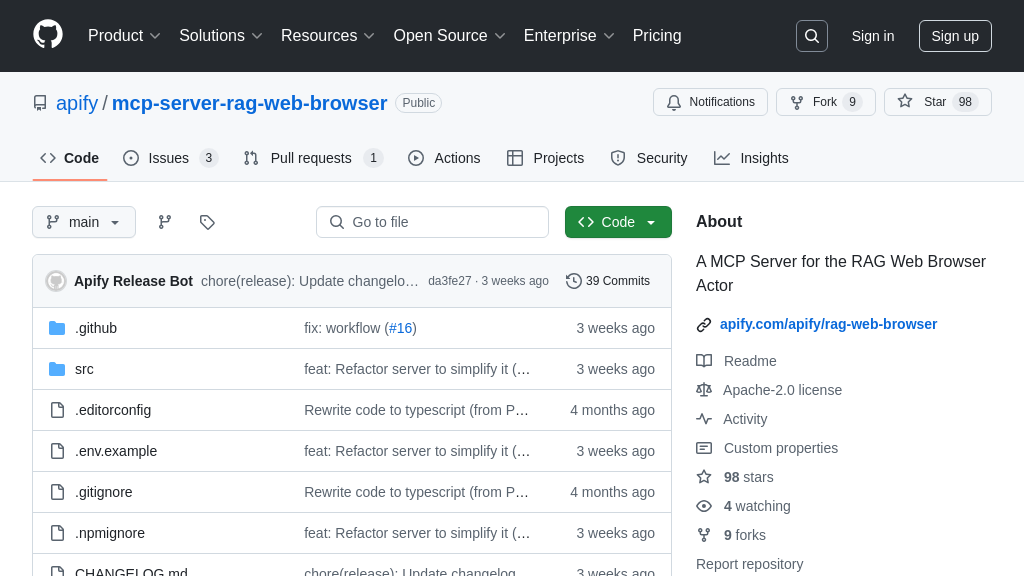

mcp-server-rag-web-browser

An MCP server that gives AI agents web search and content extraction through the RAG Web Browser Actor.

mcp-server-rag-web-browser Solution Overview

The mcp-server-rag-web-browser is an MCP server designed to enhance AI models with real-time web browsing capabilities. Acting as a bridge between AI agents and the internet, it allows models to perform web searches and extract content from web pages, similar to ChatGPT's web search feature.

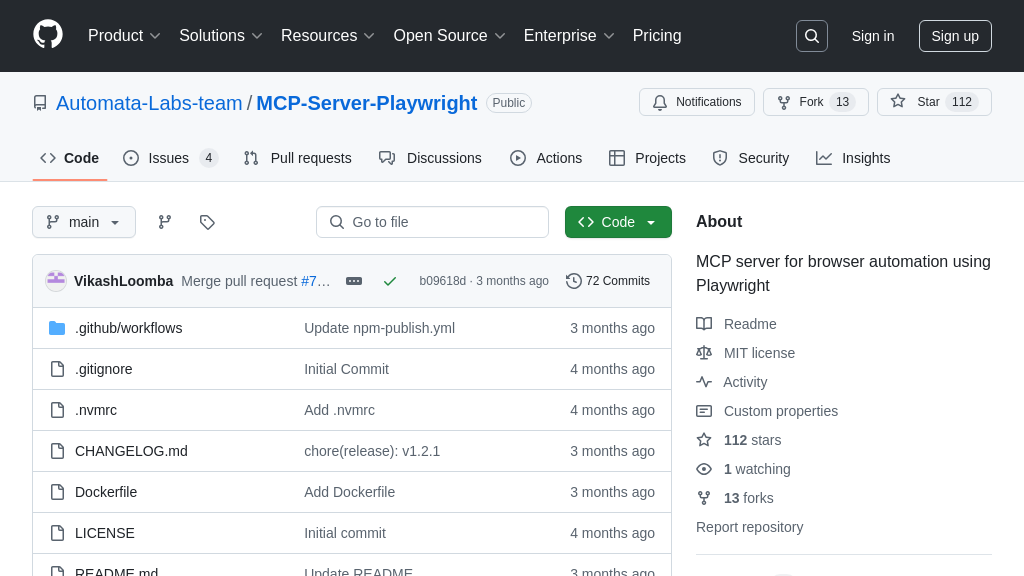

This server empowers AI agents to query Google Search, scrape the top URLs, and return cleaned content in Markdown format. It offers a search tool with customizable options, including the number of results, scraping tool (browser-playwright or raw-http), output formats, and request timeout. By integrating via standard input/output (stdio), it provides a straightforward connection for AI agents to access up-to-date information.

The core value lies in letting AI models access and process information beyond their training data, which mitigates knowledge cut-offs and can improve response accuracy. Developers get a ready-to-use component that adds web browsing to AI workflows without having to build search and scraping themselves.

mcp-server-rag-web-browser Key Capabilities

Web Search & Content Extraction

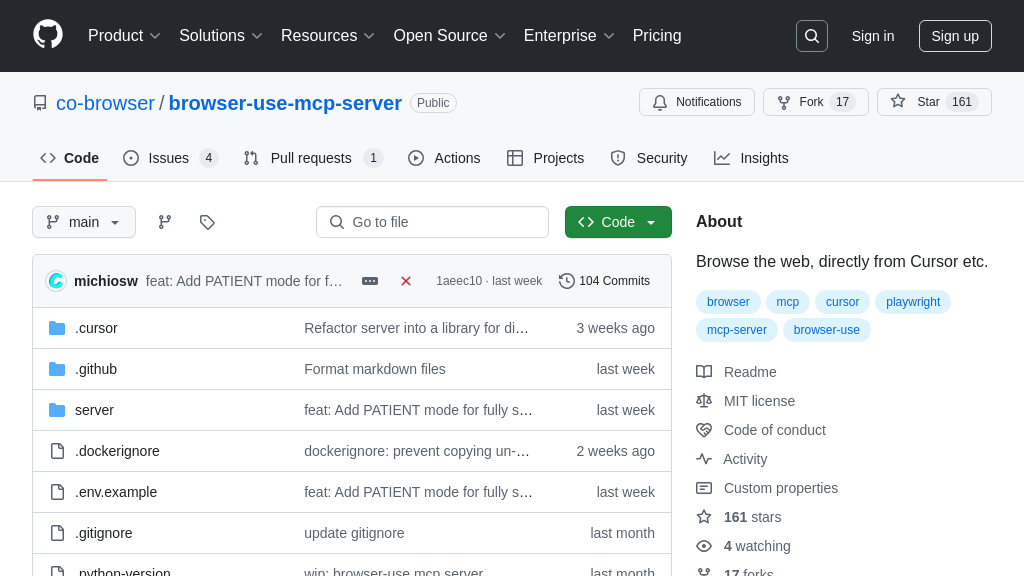

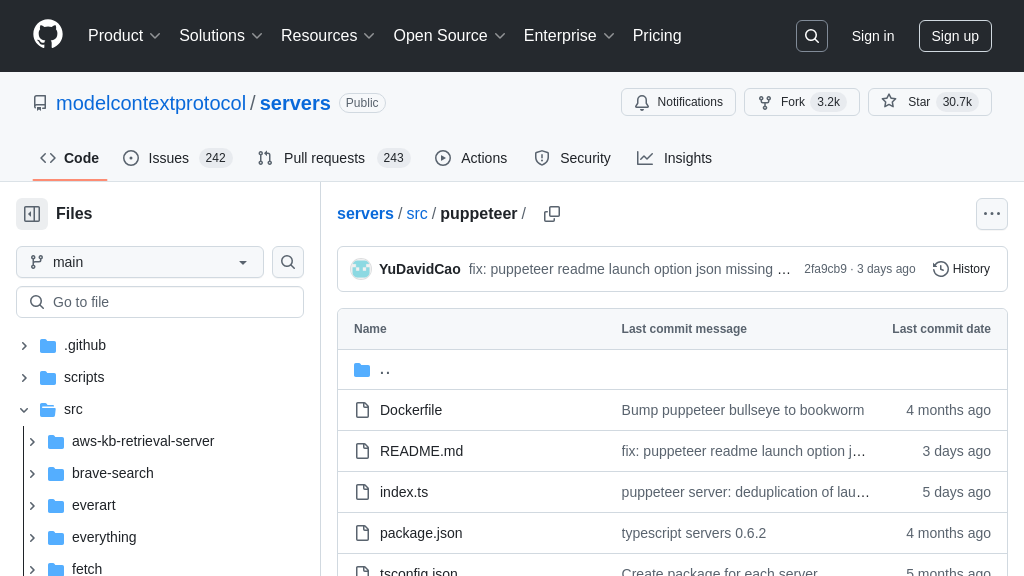

The core function of mcp-server-rag-web-browser is to enable AI models to perform web searches and extract content from web pages, effectively acting as a web browser for LLMs. It leverages the RAG Web Browser Actor to query Google Search based on a given search term, then scrapes the top N URLs from the search results. The extracted content is cleaned and formatted into Markdown, providing a structured and easily digestible format for the AI model. This allows the AI to access and process real-time information from the web, enhancing its knowledge base and enabling it to provide more accurate and up-to-date responses.

For example, an AI assistant could use this functionality to answer a user's question about the latest advancements in a specific technology. The server would search for relevant articles, extract the key information, and present it to the AI in a structured format, allowing it to formulate a comprehensive and informed response. The search tool takes one required argument, query, and four optional arguments: maxResults, scrapingTool, outputFormats, and requestTimeoutSecs.
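To make the argument list concrete, the sketch below builds an MCP tools/call request for the search tool. The argument names come from the text above; the value types, the tool name "search", and the helper buildSearchRequest are assumptions for illustration, not code taken from the server.

```typescript
// Arguments of the search tool as listed above.
// The value types are assumptions inferred from the argument names.
type ScrapingTool = "browser-playwright" | "raw-http";
type OutputFormat = "text" | "markdown" | "html";

interface SearchArguments {
  query: string;                  // required
  maxResults?: number;            // optional
  scrapingTool?: ScrapingTool;    // optional
  outputFormats?: OutputFormat[]; // optional
  requestTimeoutSecs?: number;    // optional
}

// Shape of a JSON-RPC 2.0 request using the MCP "tools/call" method.
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: SearchArguments };
}

// Hypothetical helper: wraps search arguments in a tools/call request.
// The tool name "search" is assumed from the description above.
function buildSearchRequest(id: number, args: SearchArguments): ToolCallRequest {
  if (args.query.trim() === "") {
    throw new Error("query is required and must not be empty");
  }
  return {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: { name: "search", arguments: args },
  };
}

// Example: ask for the top three results as Markdown.
const searchRequest = buildSearchRequest(1, {
  query: "latest advancements in solid-state batteries",
  maxResults: 3,
  outputFormats: ["markdown"],
});
```

Only query is mandatory here; leaving the optional fields out lets the server apply its own defaults.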

RAG Integration for LLMs

This MCP server is specifically designed to enhance Retrieval-Augmented Generation (RAG) pipelines. By providing a direct interface to web search and content extraction, it allows LLMs to augment their existing knowledge with real-time information retrieved from the internet. This is crucial for tasks that require up-to-date information or access to external data sources. The server acts as a bridge between the LLM and the vast amount of information available on the web, enabling more informed and contextually relevant responses.

Imagine a scenario where an AI model is used to generate marketing copy for a new product. By integrating with this MCP server, the AI can automatically research the latest market trends, competitor analysis, and customer reviews, and incorporate this information into the generated copy. This ensures that the marketing material is not only creative but also data-driven and aligned with current market conditions. The server communicates with the RAG Web Browser Actor in Standby mode, sending search queries and receiving extracted web content in response.
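As a rough sketch of the Standby-mode exchange described above, the function below turns search options into a request URL. The base URL used in the example, the comma-joined encoding of outputFormats, and the helper name buildStandbyUrl are all assumptions; check the RAG Web Browser Actor documentation for the actual endpoint and parameter encoding.

```typescript
interface StandbyQuery {
  query: string;
  maxResults?: number;
  scrapingTool?: "browser-playwright" | "raw-http";
  outputFormats?: string[];
  requestTimeoutSecs?: number;
}

// Hypothetical helper: serialize search options into a URL query string.
function buildStandbyUrl(baseUrl: string, q: StandbyQuery): string {
  const pairs: Array<[string, string]> = [["query", q.query]];
  if (q.maxResults !== undefined) {
    pairs.push(["maxResults", String(q.maxResults)]);
  }
  if (q.scrapingTool !== undefined) {
    pairs.push(["scrapingTool", q.scrapingTool]);
  }
  if (q.outputFormats !== undefined) {
    // Assumption: multiple formats are sent as one comma-separated value.
    pairs.push(["outputFormats", q.outputFormats.join(",")]);
  }
  if (q.requestTimeoutSecs !== undefined) {
    pairs.push(["requestTimeoutSecs", String(q.requestTimeoutSecs)]);
  }
  const queryString = pairs
    .map(([key, value]) => `${encodeURIComponent(key)}=${encodeURIComponent(value)}`)
    .join("&");
  return `${baseUrl}?${queryString}`;
}

// Assumed Standby endpoint; the Apify API token would be sent separately,
// for example in an Authorization header, when the request is issued.
const standbyUrl = buildStandbyUrl("https://rag-web-browser.apify.actor/search", {
  query: "web browser for RAG",
  maxResults: 2,
});
// standbyUrl is
// "https://rag-web-browser.apify.actor/search?query=web%20browser%20for%20RAG&maxResults=2"
```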

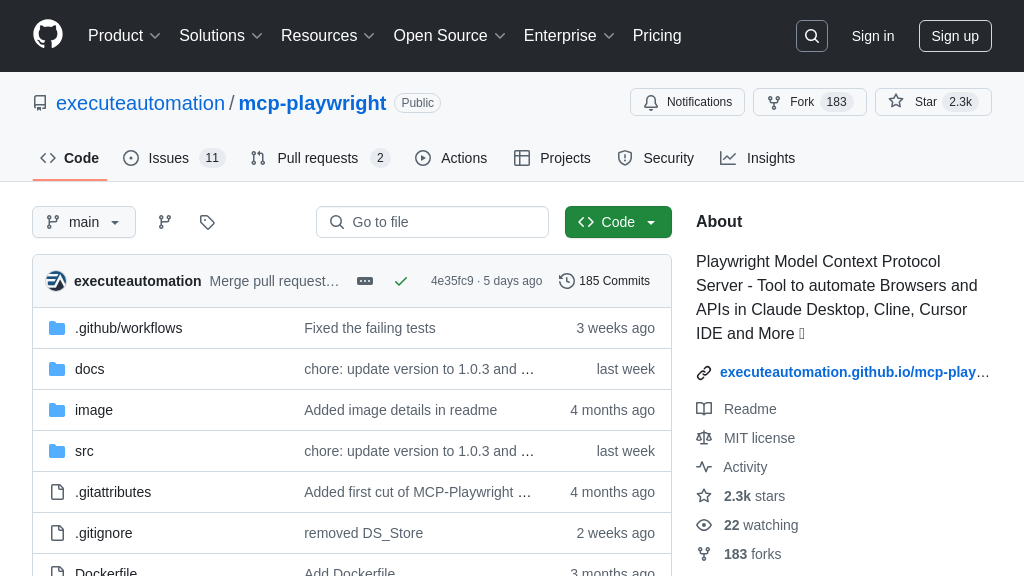

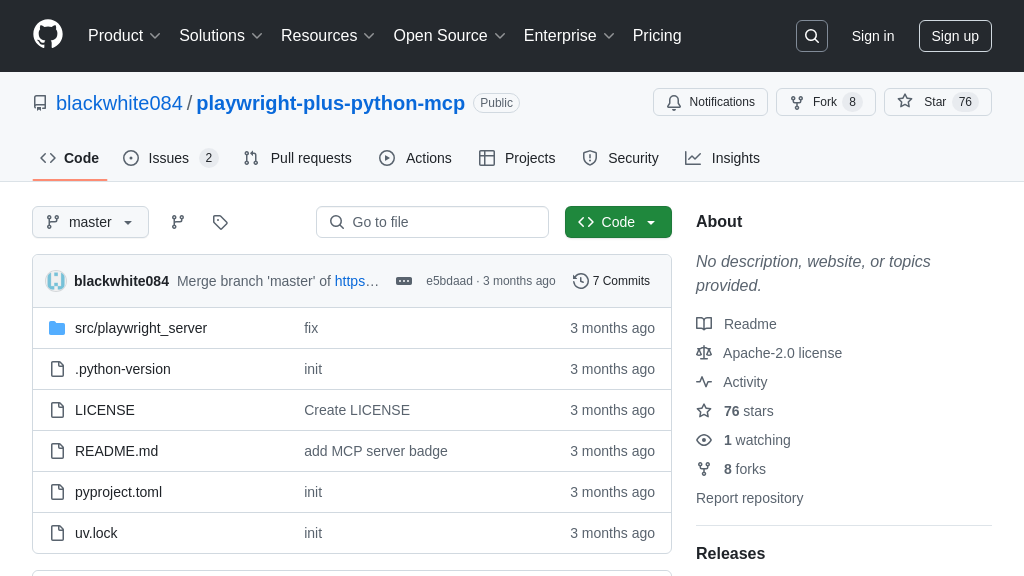

Local, Fast Response Server

The mcp-server-rag-web-browser is designed to run locally, alongside the AI agent or LLM application it serves. Because the agent talks to the server over standard input/output (stdio), there is no extra network hop between the two. Search and scraping are still performed remotely by the RAG Web Browser Actor, so end-to-end response time depends on that request. This local setup suits applications, such as desktop assistants, that need to retrieve web content on demand.
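To illustrate what the stdio connection involves: the MCP stdio transport exchanges JSON-RPC messages as newline-delimited JSON. The sketch below shows one way to frame and unframe such messages. The helper names encodeMessage and decodeMessages are hypothetical and not taken from the server's code.

```typescript
// Encode one JSON-RPC message as a single line.
// JSON.stringify without an indent argument never emits raw newlines.
function encodeMessage(message: object): string {
  return JSON.stringify(message) + "\n";
}

// Split buffered input into complete messages plus any trailing partial line.
function decodeMessages(buffer: string): { messages: unknown[]; rest: string } {
  const lines = buffer.split("\n");
  // The last element is either "" (buffer ended on a newline) or a partial message.
  const rest = lines.pop() ?? "";
  const messages = lines
    .filter((line) => line.trim() !== "")
    .map((line) => JSON.parse(line) as unknown);
  return { messages, rest };
}

// Example: one complete message followed by the start of a second one.
const wire =
  encodeMessage({ jsonrpc: "2.0", id: 1, method: "tools/list" }) +
  '{"jsonrpc":"2.0","id":2';
const decoded = decodeMessages(wire);
// decoded.messages holds the first message; decoded.rest keeps the partial
// second message until the remaining bytes arrive.
```

Keeping the leftover text in rest matters because a read from stdin can end in the middle of a message.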

Consider a desktop AI assistant that needs to quickly access and process information from the web to answer user queries. By running the MCP server locally, the assistant can retrieve information with minimal delay, providing a responsive and user-friendly experience. The server is configured through the claude_desktop_config.json file, specifying the command and arguments for launching the server, along with environment variables like the Apify API token.
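The configuration described above might look like the object built below, which serializes to the JSON stored in claude_desktop_config.json. The mcpServers layout with command, args, and env follows the Claude Desktop convention. The entry name "rag-web-browser", the npx package name, and the APIFY_TOKEN variable name are assumptions; take the exact values from the server's own README.

```typescript
interface McpServerEntry {
  command: string;             // executable used to launch the server
  args: string[];              // arguments passed to that executable
  env: Record<string, string>; // environment variables for the server process
}

interface ClaudeDesktopConfig {
  mcpServers: Record<string, McpServerEntry>;
}

// Hypothetical helper: build the config with the caller's Apify API token.
function buildClaudeDesktopConfig(apifyToken: string): ClaudeDesktopConfig {
  return {
    mcpServers: {
      // Assumed entry name, package name, and environment variable name.
      "rag-web-browser": {
        command: "npx",
        args: ["@apify/mcp-server-rag-web-browser"],
        env: { APIFY_TOKEN: apifyToken },
      },
    },
  };
}

// Pretty-printed JSON, ready to paste into claude_desktop_config.json.
const configJson = JSON.stringify(
  buildClaudeDesktopConfig("your-apify-api-token"),
  null,
  2,
);
```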

Customizable Scraping and Output

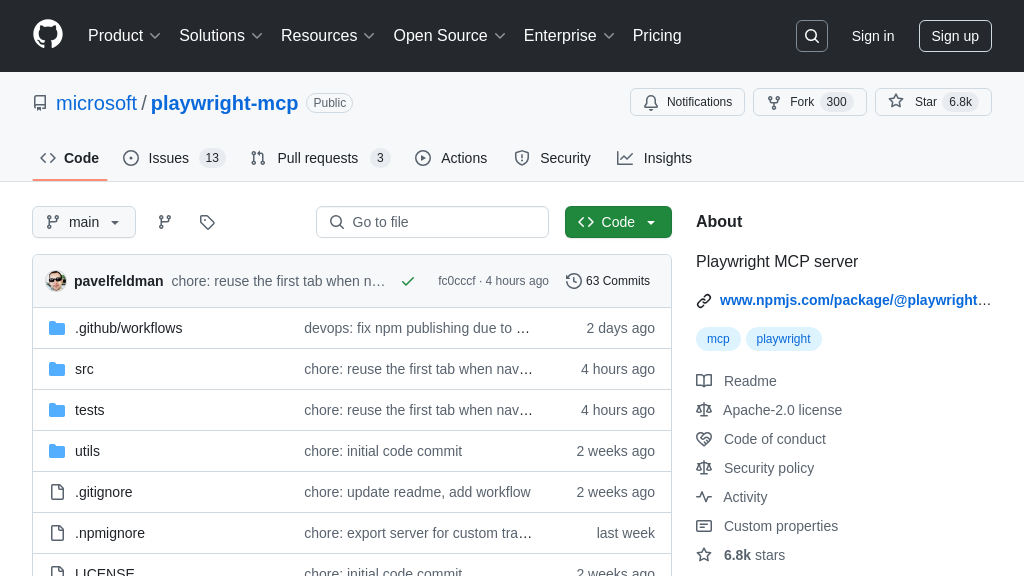

The server offers flexibility in how web content is scraped and formatted. Users can select different scraping tools (browser-playwright or raw-http) and output formats (text, markdown, or html) to tailor the extraction process to their specific needs. This allows for optimized content retrieval and formatting based on the target AI model and application. The ability to customize these parameters ensures that the extracted content is presented in the most efficient and usable format for the AI.

For instance, if an AI model is optimized for processing Markdown content, the server can be configured to output the extracted web content in Markdown format. Alternatively, if the AI requires raw HTML for more detailed analysis, the server can be configured accordingly. The scrapingTool and outputFormats arguments in the search tool provide this customization.
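A client may want to check these two arguments before sending a request. The sketch below validates them against the values named in this section. The helper parseScrapeOptions and the assumption that outputFormats is a list are illustrative, not part of the server.

```typescript
// Allowed values as named in the text above.
const SCRAPING_TOOLS = ["browser-playwright", "raw-http"] as const;
const OUTPUT_FORMATS = ["text", "markdown", "html"] as const;

type ScrapingToolName = (typeof SCRAPING_TOOLS)[number];
type OutputFormatName = (typeof OUTPUT_FORMATS)[number];

interface ScrapeOptions {
  scrapingTool: ScrapingToolName;
  outputFormats: OutputFormatName[];
}

// Hypothetical helper: reject values that the text above does not list.
function parseScrapeOptions(scrapingTool: string, outputFormats: string[]): ScrapeOptions {
  const tool = SCRAPING_TOOLS.find((candidate) => candidate === scrapingTool);
  if (tool === undefined) {
    throw new Error(`unknown scrapingTool: ${scrapingTool}`);
  }
  const formats = outputFormats.map((format) => {
    const match = OUTPUT_FORMATS.find((candidate) => candidate === format);
    if (match === undefined) {
      throw new Error(`unknown output format: ${format}`);
    }
    return match;
  });
  return { scrapingTool: tool, outputFormats: formats };
}

// Example: plain HTTP fetching with Markdown output for a Markdown-oriented model.
const markdownOptions = parseScrapeOptions("raw-http", ["markdown"]);
```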

Integration Advantages

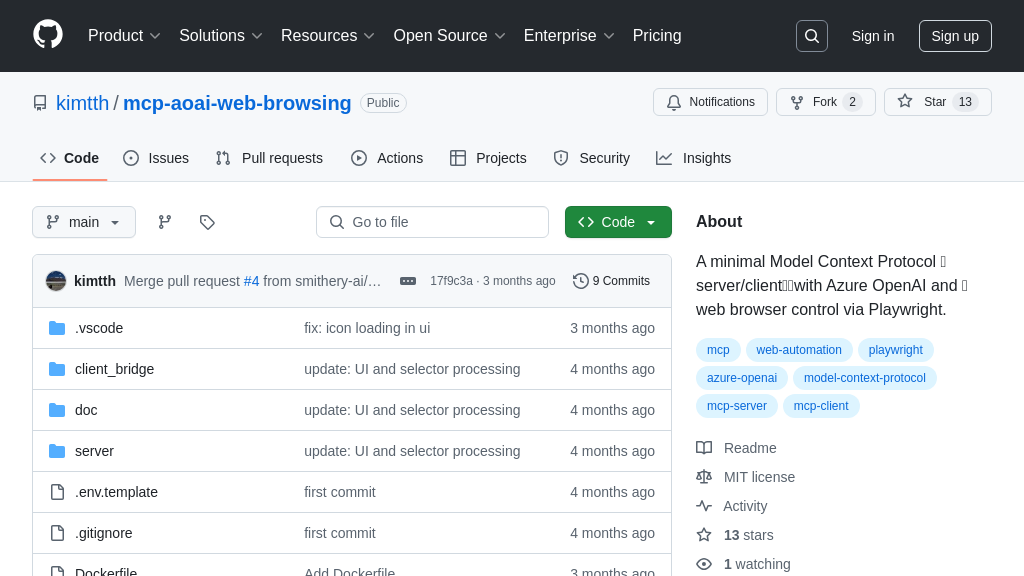

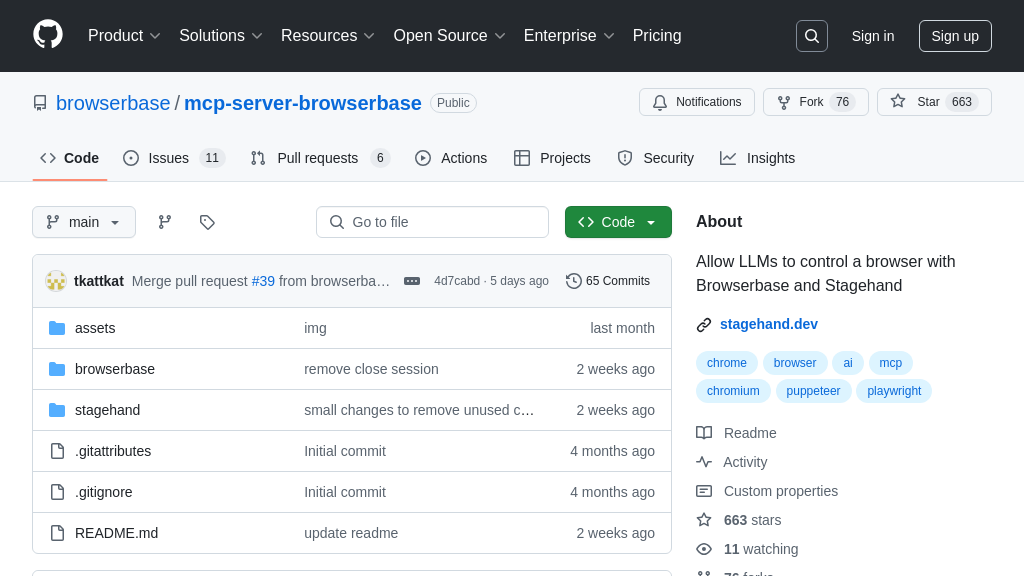

The mcp-server-rag-web-browser connects to AI agents and the wider MCP ecosystem through standard input/output (stdio), which simplifies connecting AI models to external data sources and tools. The server can be run under the MCP Inspector, which makes it easier to debug and to inspect the messages it exchanges. Furthermore, its integration with the Apify platform provides access to a wide range of specialized Actors, expanding the capabilities of AI agents.

For example, developers can use the MCP Inspector to test and debug the server's functionality, ensuring that it is correctly extracting and formatting web content. The server's integration with Apify Actors allows AI agents to leverage specialized tools for tasks such as data extraction, web automation, and more.