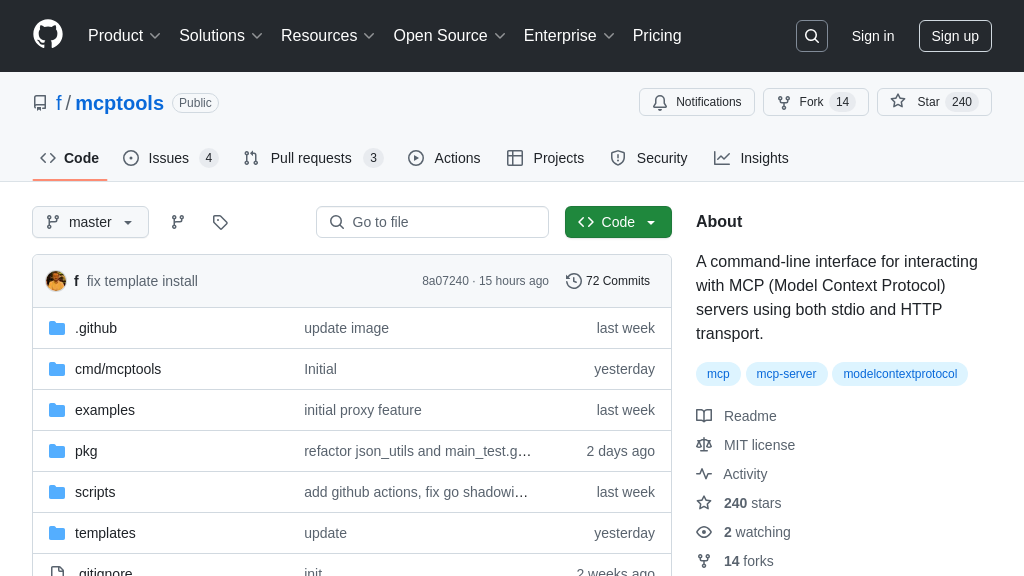

mcptools

MCP Tools: a command-line interface for working with MCP servers. Discover, call, and manage the tools and resources they expose.

mcptools Solution Overview

MCP Tools is a command-line interface for interacting with servers that implement the Model Context Protocol (MCP). It lets developers discover and use the tools and resources those servers expose to AI models. Key features include creating mock servers for testing, proxying MCP tool calls to shell scripts, and launching an interactive shell for exploring a server.

MCP Tools supports both the standard I/O (stdio) and HTTP/SSE transports, so it can talk to a server running as a local process or to one served over HTTP. Output can be formatted as JSON, pretty-printed output, or a table, depending on whether it will be read by a script or a person. The tool also provides project scaffolding with TypeScript support for starting new MCP projects. Together, these features cut much of the setup and boilerplate involved in building and testing MCP integrations.
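The difference between the output styles can be pictured with a short sketch. It renders one tool list three ways: as compact JSON, as indented JSON, and as an aligned text table. The `format_tools` function, the sample tools, and the table layout are illustrative assumptions. This is not mcptools' real output.

```python
import json

# Hypothetical tools/list result; the tool names and descriptions are made up.
TOOLS = [
    {"name": "read_file", "description": "Read a file from disk"},
    {"name": "list_dir", "description": "List directory entries"},
]


def format_tools(tools: list, fmt: str) -> str:
    """Render tool descriptors as compact JSON ('json'), indented JSON ('pretty'),
    or an aligned two-column text table ('table')."""
    if fmt == "json":
        return json.dumps(tools)
    if fmt == "pretty":
        return json.dumps(tools, indent=2)
    if fmt == "table":
        # Pad the NAME column to its widest entry so the descriptions line up.
        width = max([len("NAME")] + [len(t["name"]) for t in tools])
        rows = [f"{'NAME':<{width}}  DESCRIPTION"]
        rows += [f"{t['name']:<{width}}  {t['description']}" for t in tools]
        return "\n".join(rows)
    raise ValueError(f"unknown format: {fmt!r}")


for fmt in ("json", "pretty", "table"):
    print(format_tools(TOOLS, fmt))
    print()
```

Feeding one data structure to several renderers keeps the formats consistent. The JSON variants suit scripts and pipelines, while the table is easier to scan by eye.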

mcptools Key Capabilities

Tool Discovery and Invocation

mcptools lets users discover and invoke the tools exposed by MCP servers. It first lists a server's available tools, resources, and prompts, giving a clear picture of what the server can do. It then calls specific tools with parameters, so users do not have to handle the protocol by hand. For example, a developer can run `mcp tools` to see the tools available on a file-system server, then run `mcp call read_file --params '{"path": "README.md"}'` to read a specific file. Under the hood, mcptools communicates with the server using JSON-RPC 2.0 over stdio or HTTP/SSE.
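The protocol handling that mcptools hides can be sketched in a few lines. The example builds the JSON-RPC 2.0 requests behind discovery and invocation, using the `tools/list` and `tools/call` methods from the MCP specification. It then frames them for the stdio transport as newline-delimited JSON.

Some simplifications apply:
- The helper names are hypothetical.
- The `initialize` handshake, which a real client must perform before these calls, is omitted.
- Over HTTP/SSE the same request objects are sent; only the framing differs.

```python
import itertools
import json
from typing import Optional

# Source of increasing JSON-RPC request ids.
_request_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object; `params` is omitted when None."""
    request = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


def call_tool_request(tool: str, params_json: str) -> dict:
    """Map `mcp call <tool> --params '<json>'` onto an MCP tools/call request."""
    return jsonrpc_request("tools/call", {"name": tool, "arguments": json.loads(params_json)})


def encode_stdio(message: dict) -> bytes:
    """Frame a message for the stdio transport: one JSON object per line.
    json.dumps escapes newlines inside strings, so the only raw newline is the delimiter."""
    return (json.dumps(message) + "\n").encode("utf-8")


# Discovery first (`mcp tools`), then invocation (`mcp call read_file ...`).
for msg in (jsonrpc_request("tools/list"),
            call_tool_request("read_file", '{"path": "README.md"}')):
    print(encode_stdio(msg).decode("utf-8"), end="")
```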

Interactive Shell for MCP

The interactive shell provides a REPL (Read-Eval-Print Loop) for working with an MCP server in a single persistent session. Within it, users can explore the server's capabilities and call tools, with support for command history, tab completion, and help messages. For instance, a data scientist can run `mcp shell` to connect to a server, list the available tools with the `tools` command, and then call different tools repeatedly with varying parameters to analyze data. Because the shell keeps one connection to the server open for the whole session, repeated calls avoid the overhead of reconnecting each time, which speeds up experimentation and debugging.
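A REPL of this kind can be sketched with Python's standard `cmd` module. It dispatches `do_*` methods, generates `help` output from their docstrings, and uses `readline` for history and tab completion when that module is available. `FakeClient` is a hypothetical stand-in for a connected MCP client, and the `call <tool> <json>` syntax is an assumption for illustration. The sketch does not reproduce mcptools' actual shell commands.

```python
import cmd
import io
import json


class FakeClient:
    """Hypothetical stand-in for a connected MCP client session."""

    def list_tools(self) -> list:
        return ["read_file", "list_dir"]

    def call_tool(self, name: str, arguments: dict) -> str:
        return f"called {name} with {json.dumps(arguments, sort_keys=True)}"


class McpShell(cmd.Cmd):
    """Minimal REPL; the same client object serves every command in the session."""

    prompt = "mcp > "

    def __init__(self, client, stdout=None):
        super().__init__(stdout=stdout)
        self.client = client

    def do_tools(self, arg):
        """tools: list the tools the server exposes."""
        for name in self.client.list_tools():
            self.stdout.write(name + "\n")

    def do_call(self, arg):
        """call <tool> [json-params]: invoke a tool with optional JSON parameters."""
        name, _, raw = arg.partition(" ")
        try:
            params = json.loads(raw) if raw.strip() else {}
        except json.JSONDecodeError as exc:
            self.stdout.write(f"invalid params: {exc}\n")
            return
        self.stdout.write(self.client.call_tool(name, params) + "\n")

    def complete_call(self, text, line, begidx, endidx):
        """Complete the word being typed after `call` against the tool names."""
        return [n for n in self.client.list_tools() if n.startswith(text)]

    def do_exit(self, arg):
        """exit: end the session."""
        return True

    do_EOF = do_exit  # Ctrl-D also ends the session


def run_commands(client, commands) -> str:
    """Feed commands to the shell non-interactively and return what it printed."""
    out = io.StringIO()
    shell = McpShell(client, stdout=out)
    for line in commands:
        if shell.onecmd(line):  # a true return value means "stop"
            break
    return out.getvalue()
```

Interactively, `McpShell(FakeClient()).cmdloop()` starts the prompt. In a real tool, the client object passed to the shell is where the single persistent server connection would live.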

Mock Server Creation

mcptools can create mock MCP servers for testing client applications, letting developers simulate server behavior without a fully implemented server. A mock server can define tools, prompts, and resources, so all of the main kinds of client interaction can be tested. For example, `mcp mock tool hello_world "A simple greeting tool"` creates a mock server exposing one simple tool, against which a developer can then test their client application. This is especially useful where a real MCP server is unavailable or impractical to run. The mock server logs all requests and responses, which aids debugging and validation.
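A minimal mock server can be sketched as a dispatcher that answers `initialize`, `tools/list`, and `tools/call` from a hard-coded registry. It logs every request and response to stderr, because with the stdio transport stdout carries the protocol itself. The method names, result shapes, and error codes are assumed to follow the MCP and JSON-RPC 2.0 specifications. The registry, the canned reply text, and the function names are hypothetical. This is not how mcptools implements its mock mode.

```python
import json
import logging
import sys
from typing import Optional

# Log to stderr: with the stdio transport, stdout carries the protocol itself.
logging.basicConfig(stream=sys.stderr, level=logging.INFO)
log = logging.getLogger("mock-mcp")

# Hypothetical registry, in the spirit of `mcp mock tool hello_world "A simple greeting tool"`.
MOCK_TOOLS = {"hello_world": "A simple greeting tool"}


def _ok(req_id, result: dict) -> dict:
    return {"jsonrpc": "2.0", "id": req_id, "result": result}


def _err(req_id, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


def handle(request: dict) -> Optional[dict]:
    """Answer one JSON-RPC message from canned data; notifications get no reply."""
    log.info("request: %s", json.dumps(request))
    if "id" not in request:
        return None
    req_id, method = request["id"], request.get("method")
    params = request.get("params") or {}
    if method == "initialize":
        # Simplification: echo the client's requested protocol version back.
        response = _ok(req_id, {
            "protocolVersion": params.get("protocolVersion"),
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "mock-mcp", "version": "0.0.1"},
        })
    elif method == "tools/list":
        response = _ok(req_id, {"tools": [
            {"name": name, "description": desc,
             "inputSchema": {"type": "object", "properties": {}}}
            for name, desc in MOCK_TOOLS.items()
        ]})
    elif method == "tools/call":
        name = params.get("name")
        if name in MOCK_TOOLS:
            response = _ok(req_id, {"content": [
                {"type": "text", "text": f"mock response from {name}"}]})
        else:
            response = _err(req_id, -32602, f"Unknown tool: {name}")
    else:
        response = _err(req_id, -32601, f"Method not found: {method}")
    log.info("response: %s", json.dumps(response))
    return response


def serve_stdio() -> None:
    """Read newline-delimited JSON-RPC messages from stdin; reply on stdout."""
    for line in sys.stdin:
        if not line.strip():
            continue
        reply = handle(json.loads(line))
        if reply is not None:
            print(json.dumps(reply), flush=True)
```

Calling `serve_stdio()` at the bottom of the script would turn it into a stdio server that a client under test could launch. Keeping `handle` free of I/O also makes it easy to unit-test directly.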

Proxying Tools to Shell Scripts

Proxy mode in mcptools registers shell scripts or inline commands as MCP tools, so developers can expose existing scripts through MCP without writing new server code. mcptools handles the MCP protocol itself: it passes the tool's parameters to the script as environment variables and returns the script's output as the tool response. For example, a system administrator can register a script that restarts a service as an MCP tool, and an AI model can then call that tool to automate system-management tasks. This bridges MCP and existing infrastructure, including legacy systems. The proxy configuration is stored in a JSON file, which makes it easy to manage and keep under version control.
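The core of the proxy idea can be sketched as follows:
- Run a command with each tool parameter exported as an environment variable.
- Wrap the command's output in a `tools/call`-style result carrying `content` and `isError`.

Using each parameter name verbatim as the variable name is an assumption, and loading registrations from the JSON configuration file is left out. The inline command runs through `sys.executable -c` so the sketch works wherever Python does. A real registration would point at an existing script instead.

```python
import os
import subprocess
import sys


def run_proxied_tool(command: list, arguments: dict, timeout: float = 30.0) -> dict:
    """Run `command` with each tool argument exported as an environment
    variable, and wrap its output in an MCP-style tools/call result."""
    env = dict(os.environ)
    for key, value in arguments.items():
        env[key] = str(value)  # assumption: variable name == parameter name
    proc = subprocess.run(command, env=env, capture_output=True, text=True, timeout=timeout)
    failed = proc.returncode != 0
    # On failure prefer stderr, falling back to stdout if stderr is empty.
    text = (proc.stderr or proc.stdout) if failed else proc.stdout
    return {"content": [{"type": "text", "text": text}], "isError": failed}


# Hypothetical registration: an inline command that adds two parameters.
ADD_COMMAND = [sys.executable, "-c",
               "import os; print(int(os.environ['a']) + int(os.environ['b']))"]

print(run_proxied_tool(ADD_COMMAND, {"a": 2, "b": 3}))
```

Passing values through the environment rather than interpolating them into a shell string means this sketch never hands parameter values to a shell parser. That avoids quoting bugs and command injection through tool arguments.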