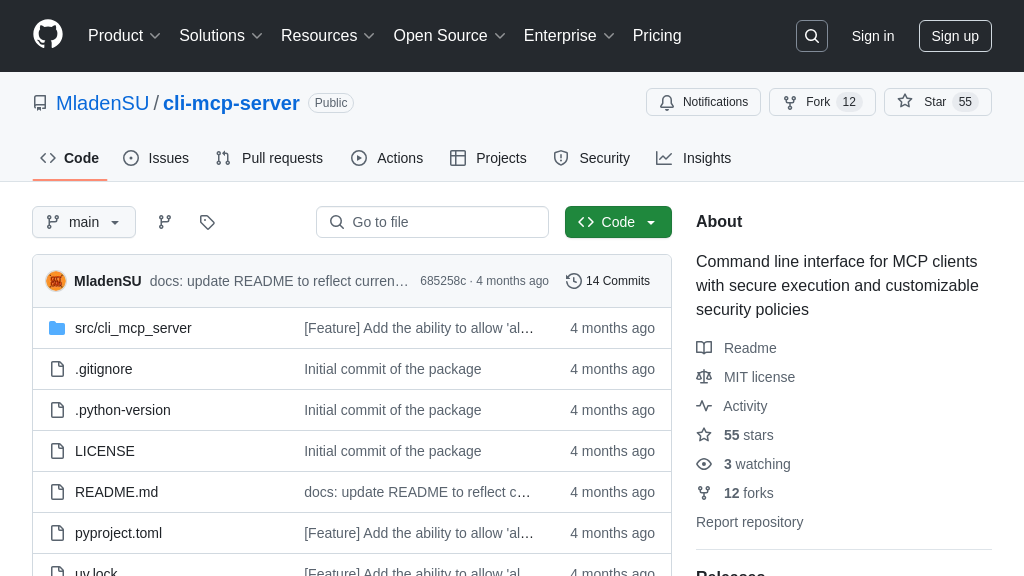

speech.sh

speech.sh: An MCP server that gives AI models text-to-speech through the OpenAI API. Enable your AI to speak!

speech.sh Solution Overview

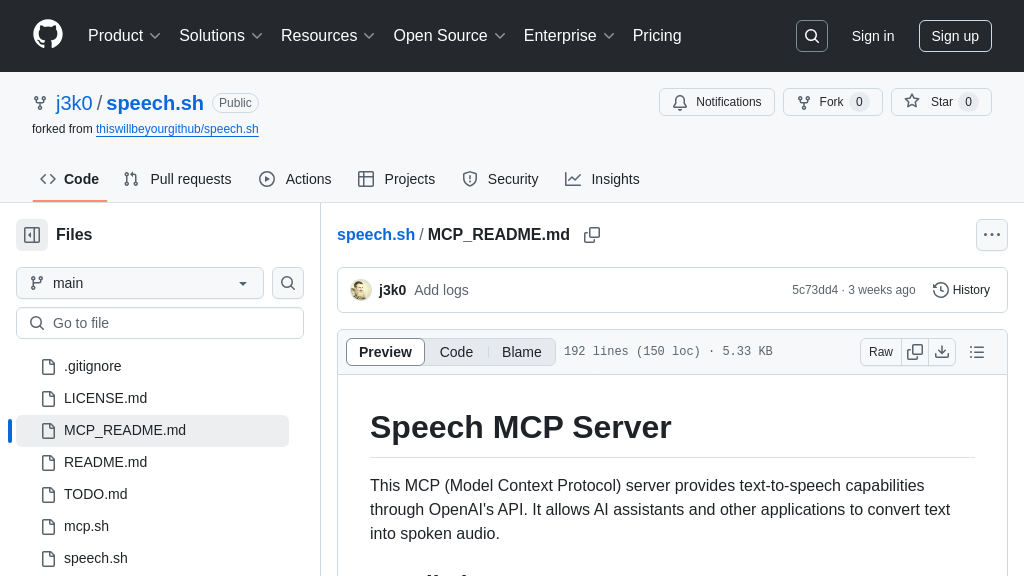

speech.sh is an MCP server that provides text-to-speech capabilities, enabling AI models to communicate audibly. It leverages the OpenAI API to convert text into spoken audio, addressing the need for voice output in AI applications. This server offers a simple "speak" tool, which takes text as input and plays the corresponding audio through the device's speakers.

The solution keeps integration simple by handling API communication, caching, and audio playback itself. Developers configure voice, speed, and model through environment variables. AI models call the "speak" tool with JSON-RPC requests, which lets assistants such as Claude speak to users directly. The server also includes security measures and detailed logging for troubleshooting. It can be launched directly or integrated into client applications over standard input/output.

speech.sh Key Capabilities

Text-to-Speech Conversion via API

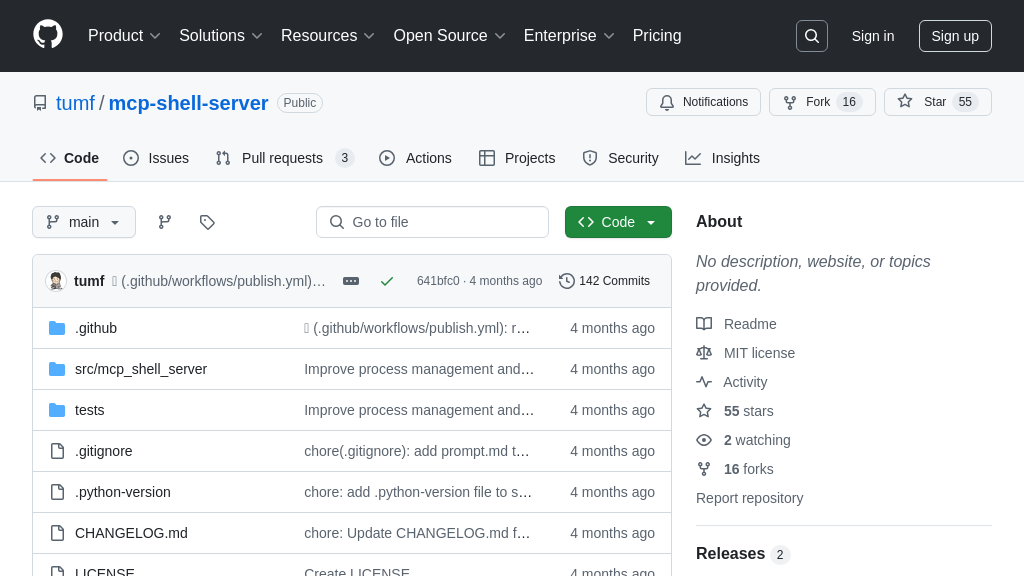

The core function of speech.sh is to expose a simple tool that converts text into spoken audio using OpenAI's TTS models. It receives a JSON-RPC request containing the text to be spoken, calls the OpenAI API to generate the corresponding audio data, and then plays the audio through the system's speakers. The process starts when the server receives a request whose method is tools/call, with "speak" as the tool name and the text as an argument. The server then constructs an API request to OpenAI, sends the text for processing, and receives the audio data. Finally, it uses a local audio player (ffmpeg or mplayer) to play the generated speech.
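The request described above can be sketched from the client side. This is a minimal illustration, not the project's own client: the envelope follows the general MCP tools/call shape, and the argument name "text" and the helper name build_speak_request are assumptions made for the example.

```python
import json


def build_speak_request(text: str, request_id: int = 1) -> str:
    """Return one JSON-RPC 2.0 message asking the server to call the "speak" tool."""
    request = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {
            "name": "speak",
            # Assumption: the tool takes its input under the key "text".
            "arguments": {"text": text},
        },
    }
    return json.dumps(request)


if __name__ == "__main__":
    # A client would write this line to the server's standard input.
    print(build_speak_request("Hello, world!"))
```

Keeping the message on a single line suits a server that reads requests from standard input one line at a time, which is an assumption about the transport rather than something stated here.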

This functionality is crucial for AI assistants or applications that need to communicate with users audibly. For example, a customer service chatbot could use this to read out responses to user queries, providing a more natural and engaging interaction. Imagine a smart home system using speech.sh to announce incoming calls or read out news headlines. The implementation relies on curl for API communication, jq for JSON parsing, and either ffmpeg or mplayer for audio playback. The choice of voice, speed, and model can be configured using environment variables.
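To make the API step concrete, the sketch below builds (but does not send) a request to OpenAI's speech endpoint. speech.sh itself does this with curl and jq; the Python version here is only an illustration, and the function name, the default values, and the placeholder API key are assumptions.

```python
import json
import urllib.request

OPENAI_TTS_URL = "https://api.openai.com/v1/audio/speech"


def build_tts_request(text, api_key, voice="alloy", speed=1.0, model="tts-1"):
    """Build a POST request for OpenAI's text-to-speech endpoint without sending it."""
    payload = {"model": model, "input": text, "voice": voice, "speed": speed}
    return urllib.request.Request(
        OPENAI_TTS_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # "sk-placeholder" is a dummy value; a real key is needed to send the request.
    req = build_tts_request("Hello from speech.sh", api_key="sk-placeholder")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Sending the request with urllib.request.urlopen would return the audio bytes in the response body, which can then be handed to a local player.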

Configurable Voice and Speed

speech.sh allows users to customize the voice and speed of the generated speech through environment variables. This enables tailoring the audio output to specific preferences or application requirements. The SPEECH_VOICE variable determines the voice used for the speech, with options like "alloy," "echo," "fable," "onyx," "nova," and "shimmer." The SPEECH_SPEED variable controls the speed of the speech, ranging from 0.25 to 4.0. By adjusting these parameters, developers can fine-tune the audio experience to match the persona of their AI assistant or the specific needs of their application.

For instance, a children's educational app might pair a more playful voice like "fable" with a slightly faster speed, while a professional assistant might prefer a more serious tone like "onyx." A user with impaired hearing might benefit from a slower speech rate for better comprehension. Configuration only requires setting the environment variables before launching the server. The script reads them at startup and passes them as parameters to the OpenAI API.
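The configuration step can be sketched as a small loader that reads the two variables named above and checks the speed range. The defaults ("alloy" and 1.0) and the function name load_speech_config are assumptions for illustration; the real script may choose different defaults or skip validation.

```python
import os


def load_speech_config(env=None):
    """Read SPEECH_VOICE and SPEECH_SPEED, falling back to assumed defaults."""
    env = os.environ if env is None else env
    # Assumption: "alloy" and 1.0 are used when the variables are unset.
    voice = env.get("SPEECH_VOICE", "alloy")
    speed = float(env.get("SPEECH_SPEED", "1.0"))
    # The documented speed range is 0.25 to 4.0.
    if not 0.25 <= speed <= 4.0:
        raise ValueError(f"SPEECH_SPEED must be between 0.25 and 4.0, got {speed}")
    # The voice is passed through unchecked, since the list of voices may grow.
    return {"voice": voice, "speed": speed}


if __name__ == "__main__":
    print(load_speech_config({"SPEECH_VOICE": "onyx", "SPEECH_SPEED": "0.9"}))
```

Validating the speed before the server starts surfaces a bad value early instead of as an API error on the first "speak" call.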

Asynchronous Speech Generation

Speech generation in speech.sh runs in the background, so the response to a "speak" request returns immediately and the server is not blocked. This asynchronous behavior keeps the server responsive, especially when it handles several requests concurrently. When a "speak" request arrives, the server starts speech generation in a separate process and continues processing other requests while the audio is generated and played.

This feature is particularly valuable in scenarios where multiple AI assistants or applications are interacting with the server simultaneously. For example, in a smart home environment with multiple devices requesting speech output, the asynchronous processing ensures that each request is handled promptly without causing delays. The implementation uses background processes and logging to manage the speech generation, allowing the main server process to remain responsive. The server captures the output of each speech request in separate log files, facilitating debugging and troubleshooting.
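The background pattern described here can be sketched as follows. This is an illustration of the general technique, not the script's actual code: the helper name run_in_background, the log file naming scheme, and the stand-in command are all assumptions.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_in_background(command, log_dir, request_id):
    """Start command without waiting for it, sending its output to a per-request log."""
    # Assumption: one log file per request, named after the request id.
    log_path = Path(log_dir) / f"speak-{request_id}.log"
    with open(log_path, "w") as log_file:
        # Popen returns as soon as the child starts; the child keeps its own
        # handle to the log file after the parent closes this one.
        process = subprocess.Popen(
            command, stdout=log_file, stderr=subprocess.STDOUT
        )
    return process, log_path


if __name__ == "__main__":
    log_dir = tempfile.mkdtemp()
    # Stand-in for the real "generate and play audio" command.
    command = [sys.executable, "-c", "print('speaking')"]
    process, log_path = run_in_background(command, log_dir, request_id=1)
    print("returned immediately; log at", log_path)
    process.wait()
    print(log_path.read_text().strip())
```

Because the caller gets control back before the child finishes, the server can reply to the request right away and inspect the log file later if something goes wrong.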