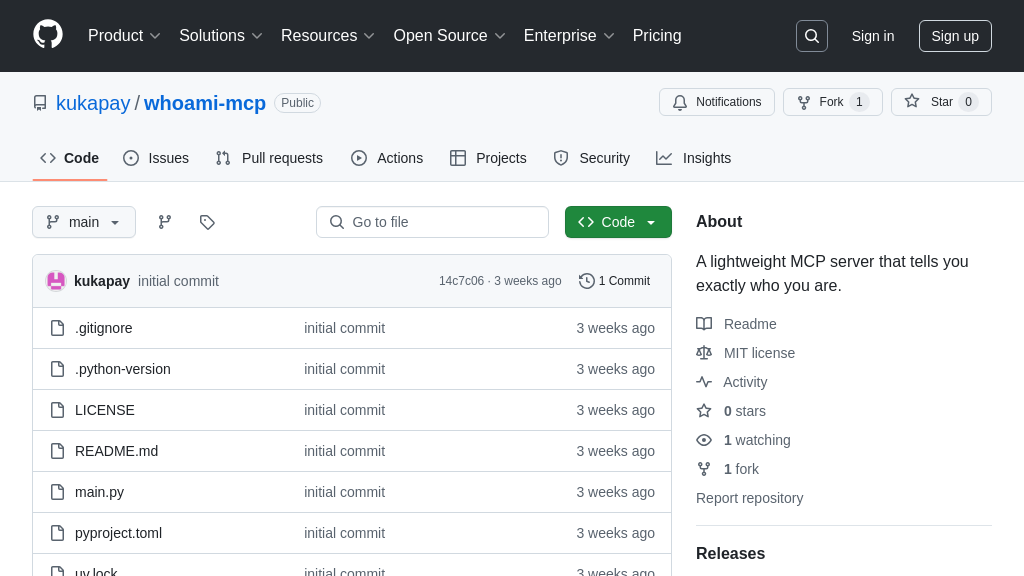

whoami-mcp

whoami-mcp: A lightweight MCP server for quick user identification in AI model integrations. Returns the system username.

whoami-mcp Solution Overview

whoami-mcp is a lightweight MCP (Model Context Protocol) server designed to integrate with local Large Language Models (LLMs). It serves one simple purpose: providing the system's username as your identity. Its core function is to retrieve the current system user's username quickly and synchronously, which suits local LLM integrations that need user context.

Built with Python, whoami-mcp is configurable via command-line arguments. Once your MCP client is set up with the provided command and arguments, you can call the "whoami" tool to obtain the system username. This gives AI workflows a straightforward way to incorporate user identity, for example to personalize interactions or to make AI-driven tasks more relevant to the current user. Its simplicity and ease of integration make it useful for developers who want to add a personal touch to local AI applications.
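To illustrate what the "whoami" tool call involves at the protocol level, here is a minimal sketch of an MCP server that exposes a tool named `whoami` over the stdio transport (newline-delimited JSON-RPC 2.0). This is not the whoami-mcp source code. The file name `whoami_server.py`, the `--stdio` flag, the server name `whoami-sketch`, and the protocol revision string are all hypothetical choices made for this example, and the username lookup assumes Python's `getpass.getuser()`.

```python
"""Hypothetical minimal MCP server exposing a "whoami" tool over stdio.

Not the whoami-mcp source: a sketch of the protocol exchange only.
"""
import getpass
import json
import sys
from typing import Optional, TextIO

PROTOCOL_VERSION = "2024-11-05"  # assumed MCP protocol revision

WHOAMI_TOOL = {
    "name": "whoami",
    "description": "Return the username of the current system user.",
    "inputSchema": {"type": "object", "properties": {}},  # takes no arguments
}


def _error(msg_id, code: int, text: str) -> dict:
    """Build a JSON-RPC 2.0 error response."""
    return {"jsonrpc": "2.0", "id": msg_id, "error": {"code": code, "message": text}}


def handle(message: dict) -> Optional[dict]:
    """Build the JSON-RPC response for one request; notifications get None."""
    if "id" not in message:
        return None  # e.g. "notifications/initialized" expects no reply
    msg_id, method = message["id"], message.get("method")
    params = message.get("params") or {}
    if method == "initialize":
        result = {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "whoami-sketch", "version": "0.1.0"},
        }
    elif method == "tools/list":
        result = {"tools": [WHOAMI_TOOL]}
    elif method == "tools/call":
        if params.get("name") != "whoami":
            return _error(msg_id, -32602, f"Unknown tool: {params.get('name')}")
        # Synchronous, in-process lookup of the OS username.
        result = {"content": [{"type": "text", "text": getpass.getuser()}], "isError": False}
    else:
        return _error(msg_id, -32601, f"Method not found: {method}")
    return {"jsonrpc": "2.0", "id": msg_id, "result": result}


def serve(stdin: TextIO, stdout: TextIO) -> None:
    """Read newline-delimited JSON-RPC messages and write one response per line."""
    for line in stdin:
        if not line.strip():
            continue
        response = handle(json.loads(line))
        if response is not None:
            stdout.write(json.dumps(response) + "\n")
            stdout.flush()


# Serve only when explicitly asked, so the module can also be imported and tested.
if __name__ == "__main__" and "--stdio" in sys.argv[1:]:
    serve(sys.stdin, sys.stdout)
```

MCP clients typically launch a stdio server from a command plus an argument list; for this sketch that pair would be `python` with `["whoami_server.py", "--stdio"]`. Consult the project's own instructions for the real command and arguments.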

whoami-mcp Key Capabilities

Simple Identity Retrieval

The core function of whoami-mcp is to retrieve the current system user's username. It does this by running a simple Python script that fetches the username from the operating system and returns it to the calling MCP client. The process is lightweight and fast, which suits scenarios where an AI workflow needs quick user identification. This is identification rather than verification: when the AI model only needs to know the user context in which it is operating, no complex authentication mechanism is required.
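The project does not show its lookup code here, so the following is a sketch assuming the script relies on Python's standard library. `getpass.getuser()` checks the `LOGNAME`, `USER`, `LNAME` and `USERNAME` environment variables in order, then falls back to the password database where the `pwd` module exists. The function name `get_system_username`, the `os.getlogin()` fallback, and the `"unknown"` default are hypothetical additions for robustness.

```python
import getpass
import os


def get_system_username(default: str = "unknown") -> str:
    """Return the current system user's name, falling back to `default` (hypothetical helper)."""
    try:
        # Checks LOGNAME, USER, LNAME and USERNAME, then the password database.
        return getpass.getuser()
    except (OSError, KeyError, ImportError):
        # Raised when no variable is set and the password-database lookup fails
        # (OSError on Python 3.13+, KeyError/ImportError on older versions).
        pass
    try:
        # Name of the user logged in on the controlling terminal, if there is one.
        return os.getlogin()
    except OSError:
        return default


print(get_system_username())
```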

Use Case: A local LLM integrated with whoami-mcp can personalize responses for the identified user. For example, it could tailor its answers or access user-specific files based on the retrieved username.
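A sketch of how an application might use the returned username for the two personalization ideas above: injecting it into a system prompt and mapping it to a per-user directory. The function names, the prompt wording, and the directory layout are hypothetical. The username is validated before it becomes part of a path, so a value such as `../root` cannot escape the base directory; the allowed character set is deliberately conservative and would reject, for example, usernames containing spaces.

```python
import re
from pathlib import Path

# Hypothetical rule: only allow a conservative character set in path components.
_SAFE_NAME = re.compile(r"[A-Za-z0-9._-]+")


def build_system_prompt(username: str) -> str:
    """Build a system prompt that tells the LLM which local user it is assisting."""
    return (
        f"You are a local assistant working for the user '{username}'. "
        "Address them by name and prefer their own files when relevant."
    )


def user_files_dir(username: str, base: Path) -> Path:
    """Map a username to a per-user directory under `base` (hypothetical layout)."""
    if not _SAFE_NAME.fullmatch(username) or username in {".", ".."}:
        raise ValueError(f"refusing to build a path from username {username!r}")
    return base / username
```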

Local LLM Integration

whoami-mcp is particularly useful for local LLM integrations because it is simple and fast. It lets these models determine the user context without relying on external authentication services or complex identity management systems. This is especially helpful in development or testing environments, where a full authentication setup would be overkill. Because the lookup is quick, retrieving the identity should not add noticeable latency to the LLM's operations, which keeps the user experience responsive.
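One way to check the latency claim on your own machine is to time the lookup directly. This sketch assumes the username comes from `getpass.getuser()`; it measures only the in-process lookup, not the MCP round trip (launching the server process and exchanging JSON-RPC messages), which will usually dominate. The helper name `time_lookup` is hypothetical.

```python
import getpass
import statistics
import time


def time_lookup(runs: int = 1000) -> float:
    """Return the median wall-clock time, in microseconds, of one getpass.getuser() call."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        getpass.getuser()
        samples.append((time.perf_counter() - start) * 1e6)
    return statistics.median(samples)
```

Calling `time_lookup()` and printing the result gives a per-call figure; the median is used so that occasional scheduler hiccups do not skew the number.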

Use Case: During the development of a local LLM, whoami-mcp can be used to simulate different user environments for testing purposes. This allows developers to verify that the LLM behaves correctly under various user contexts without needing to create multiple user accounts or configure complex authentication schemes.
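Whether different users can be simulated without creating accounts depends on how the server reads the username. If it uses Python's `getpass.getuser()` (an assumption, not confirmed by the project), the `LOGNAME` environment variable is checked first, so launching the server with an overridden environment changes the reported name. The sketch below demonstrates that behavior with a child Python process standing in for the server; the helper name is hypothetical. If the implementation instead queries the password database directly, real accounts would be needed.

```python
import os
import subprocess
import sys

# Child script that reports the username the way a getpass-based server would.
CHILD = "import getpass; print(getpass.getuser())"


def username_seen_by_child(simulated_user: str) -> str:
    """Run a child Python process with LOGNAME overridden and return the name it reports."""
    env = dict(os.environ, LOGNAME=simulated_user)
    completed = subprocess.run(
        [sys.executable, "-c", CHILD],
        env=env,
        capture_output=True,
        text=True,
        check=True,
    )
    return completed.stdout.strip()


for user in ("alice", "bob"):
    print(user, "->", username_seen_by_child(user))
```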

Streamlined User Context

By providing a simple, direct way to access the system username, whoami-mcp streamlines establishing user context for AI models. Developers no longer need to write custom code to retrieve this basic but essential piece of information. Exposing it through a standard MCP tool gives clients a consistent interface across environments and simplifies integration, so developers can focus on the core functionality of their AI models rather than on low-level system interactions.
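On the client side, the consistent interface amounts to one JSON-RPC request and one response shape. The sketch below builds an MCP `tools/call` request for the `whoami` tool and extracts the username from the reply. It assumes the tool returns the username as the first text content item, which is an assumption about whoami-mcp's output rather than documented behavior; the helper names are hypothetical.

```python
import itertools
import json

_ids = itertools.count(1)  # JSON-RPC request ids, unique per client session


def make_whoami_request() -> str:
    """Serialize a JSON-RPC 2.0 tools/call request for the "whoami" tool."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": "whoami", "arguments": {}},
    })


def parse_whoami_response(raw: str) -> str:
    """Extract the username from a tools/call response, raising on errors."""
    message = json.loads(raw)
    if "error" in message:  # protocol-level failure
        raise RuntimeError(message["error"].get("message", "unknown error"))
    result = message["result"]
    if result.get("isError"):  # tool-level failure
        raise RuntimeError("whoami tool reported an error")
    texts = [item["text"] for item in result["content"] if item.get("type") == "text"]
    if not texts:
        raise ValueError("response contained no text content")
    return texts[0]
```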

Use Case: In a data analysis pipeline, whoami-mcp can be used to track which user initiated a particular analysis. This information can be valuable for auditing purposes or for attributing results to specific individuals. The simplicity of whoami-mcp makes it easy to integrate into existing pipelines without adding significant overhead.
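A sketch of the auditing idea: each pipeline run appends one JSON Lines record attributing the analysis to the username obtained from the `whoami` tool. The file format, field names, and helper functions are hypothetical. Because the username is reported by the operating system environment rather than verified, such a log supports attribution, not tamper-proof auditing.

```python
import json
from datetime import datetime, timezone
from pathlib import Path
from typing import Dict, List


def record_analysis_run(log_path: Path, username: str, analysis: str) -> Dict[str, str]:
    """Append one audit record (a JSON object per line) attributing a run to a user."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": username,
        "analysis": analysis,
    }
    with log_path.open("a", encoding="utf-8") as log:
        log.write(json.dumps(record) + "\n")
    return record


def runs_by_user(log_path: Path, username: str) -> List[Dict[str, str]]:
    """Return all recorded runs for `username`, in the order they were logged."""
    with log_path.open(encoding="utf-8") as log:
        return [rec for rec in map(json.loads, log) if rec["user"] == username]
```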